PyTorch Learning Rate Scheduling Strategies

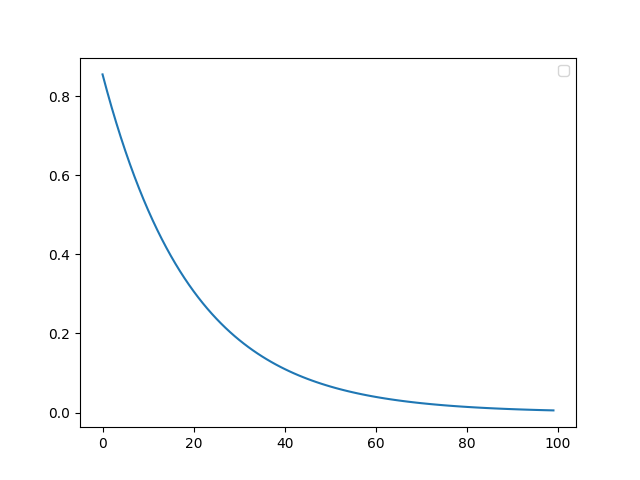

1. LambdaLR

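1.1. Function definition

torch.optim.lr_scheduler.LambdaLR(optimizer, lr_lambda, last_epoch=-1, verbose=False)

Function: sets the learning rate of each parameter group to the initial lr multiplied by the value of a given function. When last_epoch=-1, the initial lr is used as lr.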
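The rule amounts to lr(epoch) = initial_lr × lr_lambda(epoch). A minimal pure-Python sketch of that formula follows; it is not PyTorch's implementation, and `lambda_lr` is a hypothetical helper name introduced only for illustration.

```python
def lambda_lr(initial_lr, lr_lambda, epoch):
    """Closed form of the LambdaLR rule: initial lr times lr_lambda(epoch)."""
    return initial_lr * lr_lambda(epoch)


# An exponential decay factor of 0.95 per epoch (illustrative choice).
decay = lambda epoch: 0.95 ** epoch

schedule = [lambda_lr(0.9, decay, epoch) for epoch in range(3)]
print(schedule)
```

Because the factor always multiplies the initial lr, a function that returns 0 for some epoch (such as `epoch // 30` during the first 30 epochs) sets the learning rate to 0 for that epoch.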

Usage example:

# Assuming optimizer has two groups.
lambda1 = lambda epoch: epoch // 30
lambda2 = lambda epoch: 0.95 ** epoch
scheduler = LambdaLR(optimizer, lr_lambda=[lambda1, lambda2])
for epoch in range(100):
    train(...)
    validate(...)
    scheduler.step()

1.2. Visualization curve

#!/usr/bin/python3
# -*- coding: utf-8 -*-
import torch
from torchvision.models import AlexNet
import matplotlib.pyplot as plt

# Build the model; a two-class classifier is used as an example
model = AlexNet(num_classes=2)

# Set up the optimizer
lr = 0.9
optimizer = torch.optim.SGD(model.parameters(), lr=lr, momentum=0.9)

# Define the scheduler; only lambda2 is plotted here
lambda1 = lambda epoch: epoch // 30
lambda2 = lambda epoch: 0.95 ** epoch
scheduler = torch.optim.lr_scheduler.LambdaLR(optimizer, lr_lambda=lambda2)

# Record the learning rate used in each epoch
steps = []
lrs = []
for epoch in range(100):
    lrs.append(scheduler.get_last_lr()[0])
    steps.append(epoch)
    optimizer.step()  # no gradients here; called only to keep the optimizer-then-scheduler order
    scheduler.step()

# Plot the curve; legend() is called after plot() so that the label exists
plt.figure()
plt.plot(steps, lrs, label='LambdaLR')
plt.legend()
plt.savefig("lr_LambdaLR.png")

2. MultiplicativeLR

2.1. Function definition

torch.optim.lr_scheduler.MultiplicativeLR(optimizer, lr_lambda, last_epoch=-1, verbose=False)

Function: multiplies the learning rate of each parameter group by the factor returned by the given function.
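Unlike LambdaLR, the factor here is applied to the previous learning rate, so it compounds from epoch to epoch. A small pure-Python sketch of that recurrence, assuming the factor is first applied at epoch 1 (`multiplicative_lr` is a hypothetical helper, not part of PyTorch):

```python
def multiplicative_lr(initial_lr, lr_lambda, num_epochs):
    """Return the learning rates for epochs 0..num_epochs-1.

    Each epoch's lr is the previous lr times lr_lambda(epoch).
    """
    lrs = [initial_lr]
    for epoch in range(1, num_epochs):
        lrs.append(lrs[-1] * lr_lambda(epoch))
    return lrs


# A constant factor of 0.95 gives the same curve as 0.95 ** epoch.
lrs = multiplicative_lr(0.9, lambda epoch: 0.95, 4)
print(lrs)
```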

Usage example:

lmbda = lambda epoch: 0.95
scheduler = MultiplicativeLR(optimizer, lr_lambda=lmbda)
for epoch in range(100):
    train(...)
    validate(...)
    scheduler.step()

2.2. Visualization curve

#!/usr/bin/python3
# -*- coding: utf-8 -*-
import torch
from torchvision.models import AlexNet
import matplotlib.pyplot as plt

# Build the model; a two-class classifier is used as an example
model = AlexNet(num_classes=2)

# Set up the optimizer
lr = 0.9
optimizer = torch.optim.SGD(model.parameters(), lr=lr, momentum=0.9)

# Define the scheduler: multiply the lr by 0.95 every epoch
lmbda = lambda epoch: 0.95
scheduler = torch.optim.lr_scheduler.MultiplicativeLR(optimizer, lr_lambda=lmbda)

# Record the learning rate used in each epoch
steps = []
lrs = []
for epoch in range(100):
    lrs.append(scheduler.get_last_lr()[0])
    steps.append(epoch)
    optimizer.step()  # no gradients here; called only to keep the optimizer-then-scheduler order
    scheduler.step()

# Plot the curve; legend() is called after plot() so that the label exists
plt.figure()
plt.plot(steps, lrs, label='MultiplicativeLR')
plt.legend()
plt.savefig("lr_MultiplicativeLR.png")

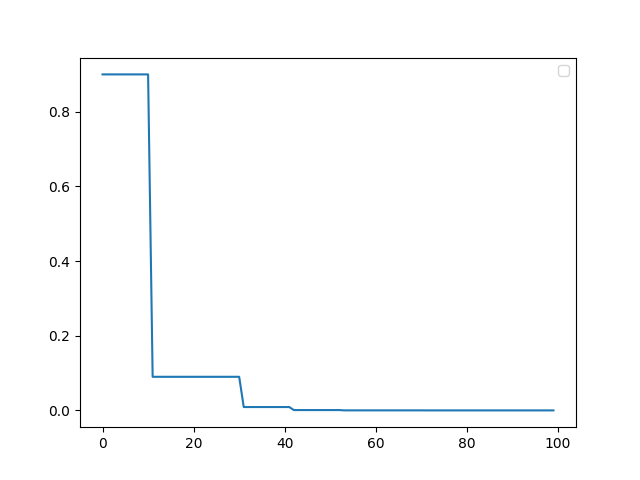

3. StepLR

3.1. Function definition

torch.optim.lr_scheduler.StepLR(optimizer, step_size, gamma=0.1, last_epoch=-1, verbose=False)

Function: decays the learning rate of each parameter group by gamma every step_size epochs.
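In closed form this is lr(epoch) = initial_lr × gamma^(epoch // step_size). A pure-Python sketch of that formula (`step_lr` is a hypothetical helper used only to illustrate the rule):

```python
def step_lr(initial_lr, step_size, gamma, epoch):
    """Closed form of the step rule: one multiplication by gamma per completed step_size epochs."""
    return initial_lr * gamma ** (epoch // step_size)


# With lr = 0.05, step_size = 30, gamma = 0.1 the lr drops at epochs 30, 60, 90.
for epoch in (0, 29, 30, 60, 90):
    print(epoch, step_lr(0.05, 30, 0.1, epoch))
```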

Usage example:

# Assuming optimizer uses lr = 0.05 for all groups
# lr = 0.05     if epoch < 30
# lr = 0.005    if 30 <= epoch < 60
# lr = 0.0005   if 60 <= epoch < 90
# ...
scheduler = StepLR(optimizer, step_size=30, gamma=0.1)
for epoch in range(100):
    train(...)
    validate(...)
    scheduler.step()

3.2. Visualization curve

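#!/usr/bin/python3
# -*- coding: utf-8 -*-
import torch
from torchvision.models import AlexNet
import matplotlib.pyplot as plt

# Build the model; a two-class classifier is used as an example
model = AlexNet(num_classes=2)

# Set up the optimizer
lr = 0.9
optimizer = torch.optim.SGD(model.parameters(), lr=lr, momentum=0.9)

# Define the scheduler: multiply the lr by 0.1 every 30 epochs
scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=30, gamma=0.1)

# Record the learning rate used in each epoch
steps = []
lrs = []
for epoch in range(100):
    lrs.append(scheduler.get_last_lr()[0])
    steps.append(epoch)
    optimizer.step()  # no gradients here; called only to keep the optimizer-then-scheduler order
    scheduler.step()

# Plot the curve; legend() is called after plot() so that the label exists
plt.figure()
plt.plot(steps, lrs, label='StepLR')
plt.legend()
plt.savefig("lr_StepLR.png")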

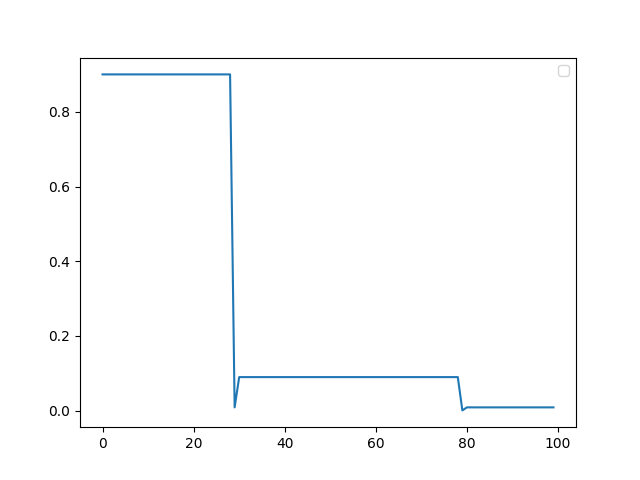

4. MultiStepLR

4.1. Function definition

torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones, gamma=0.1, last_epoch=-1, verbose=False)

Function: decays the learning rate of each parameter group by gamma each time the epoch count reaches one of the milestones.
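Put differently, the exponent of gamma is the number of milestones already reached. A pure-Python sketch of that closed form (`multi_step_lr` is a hypothetical helper, not PyTorch code):

```python
from bisect import bisect_right


def multi_step_lr(initial_lr, milestones, gamma, epoch):
    """lr = initial_lr * gamma ** (number of milestones <= epoch)."""
    reached = bisect_right(sorted(milestones), epoch)
    return initial_lr * gamma ** reached


# With milestones [30, 80] the lr drops once at epoch 30 and once at epoch 80.
for epoch in (0, 29, 30, 79, 80):
    print(epoch, multi_step_lr(0.05, [30, 80], 0.1, epoch))
```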

Usage example:

# Assuming optimizer uses lr = 0.05 for all groups
# lr = 0.05     if epoch < 30
# lr = 0.005    if 30 <= epoch < 80
# lr = 0.0005   if epoch >= 80
scheduler = MultiStepLR(optimizer, milestones=[30, 80], gamma=0.1)
for epoch in range(100):
    train(...)
    validate(...)
    scheduler.step()

4.2. Visualization curve

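#!/usr/bin/python3
# -*- coding: utf-8 -*-
import torch
from torchvision.models import AlexNet
import matplotlib.pyplot as plt

# Build the model; a two-class classifier is used as an example
model = AlexNet(num_classes=2)

# Set up the optimizer
lr = 0.9
optimizer = torch.optim.SGD(model.parameters(), lr=lr, momentum=0.9)

# Define the scheduler: multiply the lr by 0.1 at epochs 30 and 80
scheduler = torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones=[30, 80], gamma=0.1)

# Record the learning rate used in each epoch
steps = []
lrs = []
for epoch in range(100):
    lrs.append(scheduler.get_last_lr()[0])
    steps.append(epoch)
    optimizer.step()  # no gradients here; called only to keep the optimizer-then-scheduler order
    scheduler.step()

# Plot the curve; legend() is called after plot() so that the label exists
plt.figure()
plt.plot(steps, lrs, label='MultiStepLR')
plt.legend()
plt.savefig("lr_MultiStepLR.png")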

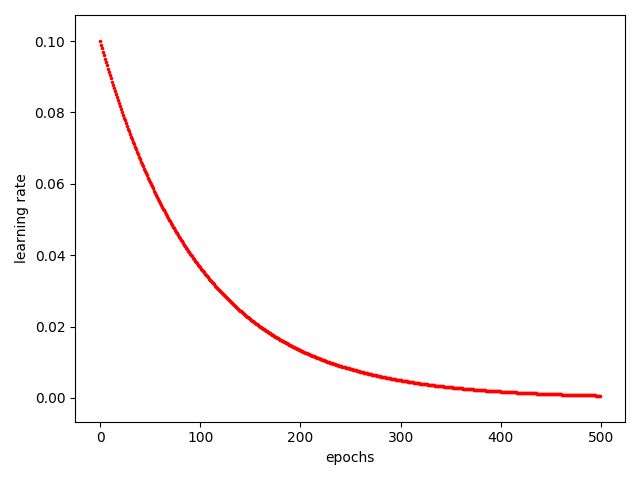

5. ExponentialLR

5.1. Function definition

torch.optim.lr_scheduler.ExponentialLR(optimizer, gamma, last_epoch=-1, verbose=False)

Function: decays the learning rate of each parameter group by gamma every epoch, i.e. exponentially.
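Since lr(epoch) = initial_lr × gamma^epoch, a gamma can be derived from the learning rate one wants to reach after a given number of epochs. The sketch below assumes that use case; both helper names are hypothetical and the code is plain Python rather than PyTorch.

```python
def exponential_lr(initial_lr, gamma, epoch):
    """Closed form of exponential decay."""
    return initial_lr * gamma ** epoch


def gamma_for_target(initial_lr, final_lr, num_epochs):
    """Gamma that takes initial_lr to final_lr in exactly num_epochs epochs."""
    return (final_lr / initial_lr) ** (1.0 / num_epochs)


# Decay from 0.9 to 0.009 over 100 epochs.
gamma = gamma_for_target(0.9, 0.009, 100)
print(gamma, exponential_lr(0.9, gamma, 100))
```

A gamma close to 1 gives a gentle decay; a small value such as 0.1 shrinks the learning rate by ten orders of magnitude within ten epochs.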

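5.2. Visualization curve

#!/usr/bin/python3
# -*- coding: utf-8 -*-
import torch
from torchvision.models import AlexNet
import matplotlib.pyplot as plt

# Build the model; a two-class classifier is used as an example
model = AlexNet(num_classes=2)

# Set up the optimizer
lr = 0.9
optimizer = torch.optim.SGD(model.parameters(), lr=lr, momentum=0.9)

# Define the scheduler: multiply the lr by 0.1 every epoch
scheduler = torch.optim.lr_scheduler.ExponentialLR(optimizer, gamma=0.1)

# Record the learning rate used in each epoch
steps = []
lrs = []
for epoch in range(100):
    lrs.append(scheduler.get_last_lr()[0])
    steps.append(epoch)
    optimizer.step()  # no gradients here; called only to keep the optimizer-then-scheduler order
    scheduler.step()

# Plot the curve; legend() is called after plot() so that the label exists
plt.figure()
plt.plot(steps, lrs, label='ExponentialLR')
plt.legend()
plt.savefig("lr_ExponentialLR.png")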

6. CosineAnnealingLR

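6.1. Function definition

torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max, eta_min=0, last_epoch=-1, verbose=False)

Function: adjusts the learning rate by cosine annealing.

The learning rate follows a cosine curve. The initial learning rate is the maximum and the period is 2 × T_max; within one period the learning rate first decreases and then increases.

T_max is the number of epochs in half a period, i.e. the number of epochs needed to anneal from the initial learning rate down to the minimum. eta_min is the minimum learning rate, i.e. the lowest value the learning rate reaches within a period; its default is 0.

In short, the learning rate moves between the initial value base_lr and the minimum eta_min along a cosine curve, reaching the minimum after T_max epochs.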
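The description corresponds to the closed form lr(t) = eta_min + (base_lr − eta_min) × (1 + cos(π t / T_max)) / 2. A pure-Python sketch of that formula (`cosine_annealing_lr` is a hypothetical helper, and the closed form is assumed to match stepping the scheduler once per epoch):

```python
import math


def cosine_annealing_lr(base_lr, T_max, epoch, eta_min=0.0):
    """Cosine curve between base_lr (epoch 0) and eta_min (epoch T_max)."""
    cosine = (1 + math.cos(math.pi * epoch / T_max)) / 2
    return eta_min + (base_lr - eta_min) * cosine


# T_max = 20: minimum at epoch 20, back at the maximum at epoch 40.
for epoch in (0, 10, 20, 40):
    print(epoch, cosine_annealing_lr(0.9, 20, epoch))
```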

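6.2. Visualization curve

#!/usr/bin/python3
# -*- coding: utf-8 -*-
import torch
from torchvision.models import AlexNet
import matplotlib.pyplot as plt

# Build the model; a two-class classifier is used as an example
model = AlexNet(num_classes=2)

# Set up the optimizer
lr = 0.9
optimizer = torch.optim.SGD(model.parameters(), lr=lr, momentum=0.9)

# Define the scheduler: anneal to the minimum in 20 epochs
scheduler = torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max=20)

# Record the learning rate used in each epoch
steps = []
lrs = []
for epoch in range(100):
    lrs.append(scheduler.get_last_lr()[0])
    steps.append(epoch)
    optimizer.step()  # no gradients here; called only to keep the optimizer-then-scheduler order
    scheduler.step()

# Plot the curve; legend() is called after plot() so that the label exists
plt.figure()
plt.plot(steps, lrs, label='CosineAnnealingLR')
plt.legend()
plt.savefig("lr_CosineAnnealingLR.png")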

7. ReduceLROnPlateau

7.1. Function definition

torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer, mode='min', factor=0.1, patience=10, threshold=0.0001, threshold_mode='rel', cooldown=0, min_lr=0, eps=1e-08, verbose=False)

Function: reduces the learning rate by factor when the monitored metric has stopped improving for more than patience epochs.

Usage example:

optimizer = torch.optim.SGD(model.parameters(), lr=0.1, momentum=0.9)
scheduler = ReduceLROnPlateau(optimizer, 'min')
for epoch in range(100):
    train(...)
    val_loss = validate(...)
    # Note that step should be called after validate()
    scheduler.step(val_loss)

7.2. Visualization curve

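#!/usr/bin/python3
# -*- coding: utf-8 -*-
import torch
from torchvision.models import AlexNet
import matplotlib.pyplot as plt

# Build the model; a two-class classifier is used as an example
model = AlexNet(num_classes=2)

# Set up the optimizer
lr = 0.9
optimizer = torch.optim.SGD(model.parameters(), lr=lr, momentum=0.9)

# Define the scheduler: reduce the lr when the loss stops decreasing
scheduler = torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer, 'min')

# Feed a simulated validation loss and record the resulting learning rate
steps = []
lrs = []
loss = [0.9, 0.6, 0.6, 0.5, 0.1]  # one simulated loss value per block of 20 epochs
for epoch in range(100):
    optimizer.step()  # no gradients here; called only to keep the optimizer-then-scheduler order
    scheduler.step(loss[epoch // 20])
    lrs.append(optimizer.param_groups[0]['lr'])  # read the current lr as a float
    steps.append(epoch)

# Plot the curve; legend() is called after plot() so that the label exists
plt.figure()
plt.plot(steps, lrs, label='ReduceLROnPlateau')
plt.legend()
plt.savefig("lr_ReduceLROnPlateau.png")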
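The plateau logic can be sketched in a few lines of plain Python. This is a deliberately simplified model that assumes mode='min', threshold_mode='rel' and cooldown=0, and ignores eps; `reduce_on_plateau` is a hypothetical helper, not the library's code.

```python
def reduce_on_plateau(initial_lr, losses, factor=0.1, patience=10,
                      threshold=1e-4, min_lr=0.0):
    """Return the lr after each reported loss (simplified 'min' mode)."""
    lr = initial_lr
    best = float("inf")
    num_bad_epochs = 0
    lrs = []
    for loss in losses:
        if loss < best * (1 - threshold):
            # Significant improvement: remember it and reset the counter.
            best = loss
            num_bad_epochs = 0
        else:
            num_bad_epochs += 1
        if num_bad_epochs > patience:
            # More than `patience` epochs without improvement: decay the lr.
            lr = max(lr * factor, min_lr)
            num_bad_epochs = 0
        lrs.append(lr)
    return lrs


# The loss improves once, then stalls; with patience=2 the third stalled epoch triggers a decay.
lrs = reduce_on_plateau(0.1, [1.0, 0.8, 0.8, 0.8, 0.8], patience=2)
print(lrs)
```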

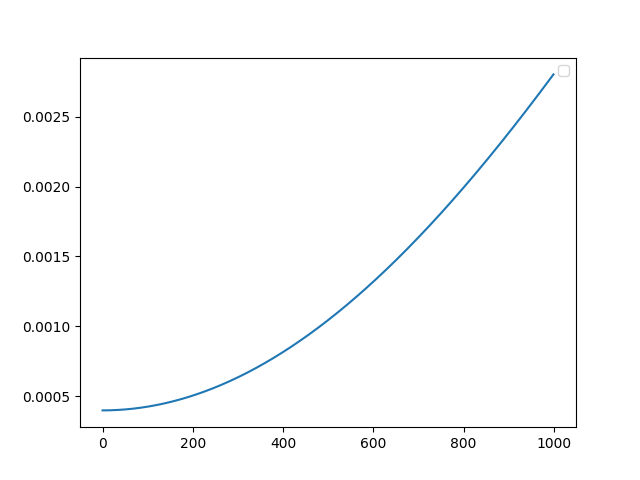

8. CyclicLR

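8.1. Function definition

torch.optim.lr_scheduler.CyclicLR(optimizer, base_lr, max_lr, step_size_up=2000, step_size_down=None, mode='triangular', gamma=1.0, scale_fn=None, scale_mode='cycle', cycle_momentum=True, base_momentum=0.8, max_momentum=0.9, last_epoch=-1, verbose=False)

Function: sets the learning rate of each parameter group according to the cyclical learning rate policy. The policy cycles the learning rate between two boundaries at a constant frequency.

In other words, the cyclic learning rate bounces back and forth between the minimum and the maximum value.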
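For the 'triangular' mode with equal up and down phases, the bounce can be written as a short formula. The following pure-Python sketch assumes step_size_up == step_size_down == step_size; `triangular_cyclic_lr` is a hypothetical helper name.

```python
import math


def triangular_cyclic_lr(base_lr, max_lr, step_size, iteration):
    """Triangular wave: base_lr -> max_lr -> base_lr every 2 * step_size iterations."""
    cycle = math.floor(1 + iteration / (2 * step_size))
    # x goes 1 -> 0 -> 1 within each cycle.
    x = abs(iteration / step_size - 2 * cycle + 1)
    return base_lr + (max_lr - base_lr) * max(0.0, 1 - x)


# base_lr = 1e-4, max_lr = 5e-4, 500 iterations up and 500 down.
for iteration in (0, 250, 500, 750, 1000):
    print(iteration, triangular_cyclic_lr(1e-4, 5e-4, 500, iteration))
```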

Usage example (the scheduler is stepped after every batch, not every epoch):

optimizer = torch.optim.SGD(model.parameters(), lr=0.1, momentum=0.9)
scheduler = torch.optim.lr_scheduler.CyclicLR(optimizer, base_lr=0.01, max_lr=0.1)
data_loader = torch.utils.data.DataLoader(...)
for epoch in range(10):
    for batch in data_loader:
        train_batch(...)
        scheduler.step()

8.2. Visualization curve

#!/usr/bin/python3
# -*- coding: utf-8 -*-
import torch
from torchvision.models import AlexNet
import matplotlib.pyplot as plt

# Build the model; a two-class classifier is used as an example
model = AlexNet(num_classes=2)

# Set up the optimizer
optimizer = torch.optim.SGD(model.parameters(), lr=0.01, momentum=0.9)

# Define the scheduler: 500 iterations up and 500 down, so one cycle is 1000 iterations
base_lr = 1e-4
max_lr = 5e-4
scheduler = torch.optim.lr_scheduler.CyclicLR(optimizer, base_lr, max_lr,
                                              step_size_up=500,
                                              step_size_down=500,
                                              mode='triangular',
                                              gamma=1.0, scale_fn=None,
                                              scale_mode='cycle',
                                              cycle_momentum=True,
                                              base_momentum=0.8,
                                              max_momentum=0.9)

# Record the learning rate used in each iteration (one full cycle)
steps = []
lrs = []
for iteration in range(1000):
    lrs.append(scheduler.get_last_lr()[0])
    steps.append(iteration)
    optimizer.step()  # no gradients here; called only to keep the optimizer-then-scheduler order
    scheduler.step()

# Plot the curve; legend() is called after plot() so that the label exists
plt.figure()
plt.plot(steps, lrs, label='CyclicLR')
plt.legend()
plt.savefig("lr_CyclicLR.png")

9. OneCycleLR

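9.1. Function definition

torch.optim.lr_scheduler.OneCycleLR(optimizer, max_lr, total_steps=None, epochs=None, steps_per_epoch=None, pct_start=0.3, anneal_strategy='cos', cycle_momentum=True, base_momentum=0.85, max_momentum=0.95, div_factor=25.0, final_div_factor=10000.0, three_phase=False, last_epoch=-1, verbose=False)

Function: sets the learning rate of each parameter group according to the 1cycle learning rate policy. OneCycleLR anneals the learning rate from an initial learning rate up to some maximum learning rate, and then from that maximum down to some minimum learning rate much lower than the initial one.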
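The three learning rates involved follow from the parameters: the initial lr is max_lr / div_factor and the minimum is initial_lr / final_div_factor, so the lr passed to the optimizer itself is not used. A rough pure-Python sketch of the default two-phase cosine schedule (three_phase=False, anneal_strategy='cos'); `one_cycle_lr` is a hypothetical helper, and the phase boundaries are an assumption modelled on the signature above rather than a copy of the library code.

```python
import math


def one_cycle_lr(max_lr, total_steps, step, pct_start=0.3,
                 div_factor=25.0, final_div_factor=1e4):
    """Two-phase 1cycle schedule with cosine annealing in both phases."""
    initial_lr = max_lr / div_factor
    min_lr = initial_lr / final_div_factor

    def cos_anneal(start, end, pct):
        # pct = 0 gives start, pct = 1 gives end.
        return end + (start - end) / 2.0 * (math.cos(math.pi * pct) + 1)

    warmup_end = pct_start * total_steps - 1  # last step of the rising phase
    last_step = total_steps - 1
    if step <= warmup_end:
        return cos_anneal(initial_lr, max_lr, step / warmup_end)
    return cos_anneal(max_lr, min_lr, (step - warmup_end) / (last_step - warmup_end))


# max_lr = 0.01 over 1000 steps: start, peak, and end of the cycle.
for step in (0, 299, 999):
    print(step, one_cycle_lr(0.01, 1000, step))
```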

Usage example (the scheduler is stepped after every batch):

data_loader = torch.utils.data.DataLoader(...)
optimizer = torch.optim.SGD(model.parameters(), lr=0.1, momentum=0.9)
scheduler = torch.optim.lr_scheduler.OneCycleLR(optimizer, max_lr=0.01, steps_per_epoch=len(data_loader), epochs=10)
for epoch in range(10):
    for batch in data_loader:
        train_batch(...)
        scheduler.step()

9.2. Visualization curve

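#!/usr/bin/python3
# -*- coding: utf-8 -*-
import torch
from torchvision.models import AlexNet
import matplotlib.pyplot as plt

# Build the model; a two-class classifier is used as an example
model = AlexNet(num_classes=2)

# Set up the optimizer
optimizer = torch.optim.SGD(model.parameters(), lr=0.1, momentum=0.9)

# Define the scheduler: 100 steps per epoch x 10 epochs = 1000 steps,
# matching the loop below so that the whole cycle is plotted
scheduler = torch.optim.lr_scheduler.OneCycleLR(optimizer, max_lr=0.01, steps_per_epoch=100, epochs=10)

# Record the learning rate used in each iteration
steps = []
lrs = []
for iteration in range(1000):
    lrs.append(scheduler.get_last_lr()[0])
    steps.append(iteration)
    optimizer.step()  # no gradients here; called only to keep the optimizer-then-scheduler order
    scheduler.step()

# Plot the curve; legend() is called after plot() so that the label exists
plt.figure()
plt.plot(steps, lrs, label='OneCycleLR')
plt.legend()
plt.savefig("lr_OneCycleLR.png")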

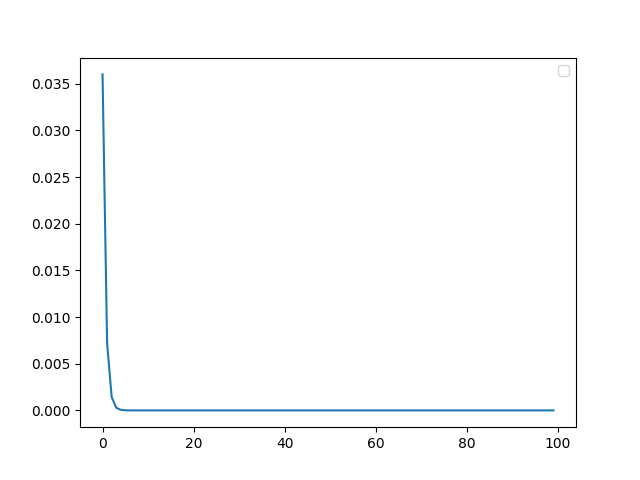

10. CosineAnnealingWarmRestarts

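10.1. Function definition

torch.optim.lr_scheduler.CosineAnnealingWarmRestarts(optimizer, T_0, T_mult=1, eta_min=0, last_epoch=-1, verbose=False)

Function: the cosine learning rate jumps back to the maximum after decaying to the minimum (a warm restart). The first cycle lasts T_0 epochs; when T_mult > 1 each following cycle is T_mult times longer, so the decay becomes slower.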
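The restart behaviour can be sketched in plain Python by tracking the position T_cur inside the current cycle of length T_i. This is a sketch of the formula under those definitions, with a hypothetical helper name `warm_restarts_lr`; it is not PyTorch's implementation.

```python
import math


def warm_restarts_lr(base_lr, T_0, epoch, T_mult=1, eta_min=0.0):
    """Cosine annealing that restarts at base_lr at the end of every cycle."""
    T_i, T_cur = T_0, epoch
    # Skip over completed cycles; each one is T_mult times longer than the last.
    while T_cur >= T_i:
        T_cur -= T_i
        T_i *= T_mult
    cosine = (1 + math.cos(math.pi * T_cur / T_i)) / 2
    return eta_min + (base_lr - eta_min) * cosine


# T_0 = 10, T_mult = 2: cycles cover epochs [0, 10), [10, 30), [30, 70), ...
for epoch in (0, 5, 10, 20, 30):
    print(epoch, warm_restarts_lr(0.1, 10, epoch, T_mult=2))
```

Fractional epochs such as `epoch + i / iters` work with the same function, which is why the scheduler can be stepped once per batch.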

Usage example:

scheduler = CosineAnnealingWarmRestarts(optimizer, T_0, T_mult)
iters = len(dataloader)
for epoch in range(20):
    for i, sample in enumerate(dataloader):
        inputs, labels = sample['inputs'], sample['labels']
        optimizer.zero_grad()
        outputs = net(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()
        # Pass a fractional epoch so the lr is updated after every batch
        scheduler.step(epoch + i / iters)

10.2. Visualization curve

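#!/usr/bin/python3
# -*- coding: utf-8 -*-
import torch
from torchvision.models import AlexNet
import matplotlib.pyplot as plt

# Build the model; a two-class classifier is used as an example
model = AlexNet(num_classes=2)

# Set up the optimizer
optimizer = torch.optim.SGD(model.parameters(), lr=0.1, momentum=0.9)

# Define the scheduler: restart every 10 epochs, all cycles of equal length
T_0 = 10
T_mult = 1
scheduler = torch.optim.lr_scheduler.CosineAnnealingWarmRestarts(optimizer, T_0, T_mult)

# Simulate 20 iterations per epoch and record the lr after every iteration
steps = []
lrs = []
for iteration in range(1000):
    optimizer.step()  # no gradients here; called only to keep the optimizer-then-scheduler order
    scheduler.step(iteration / 20)  # fractional epoch
    lrs.append(scheduler.get_last_lr()[0])
    steps.append(iteration)

# Plot the curve; legend() is called after plot() so that the label exists
plt.figure()
plt.plot(steps, lrs, label='CosineAnnealingWarmRestarts')
plt.legend()
plt.savefig("lr_CosineAnnealingWarmRestarts.png")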