Original article: Limitations of Deep Learning in AI Research (2019-02-12)

Author: Roberto Iriondo

Article

Artificial intelligence (AI) has achieved remarkable results with the rise of deep learning, but it still falls well short of human cognitive abilities.

Deep learning, a branch of machine learning, has in recent years reached accuracy close to, and in some cases beyond, human level in many practical applications. These include revolutionizing customer experience, machine translation, language recognition, autonomous vehicles, computer vision, text generation, speech understanding, and many other AI applications [2].

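In machine learning, an AI agent learns from data using machine learning algorithms; in deep learning, it analyzes data with a neural network architecture loosely modeled on the human brain. A deep learning model does not need an algorithm that specifies what to do with the data. This is made possible by the vast amount of data that people collect and consume, which is in turn fed to deep learning models [3].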

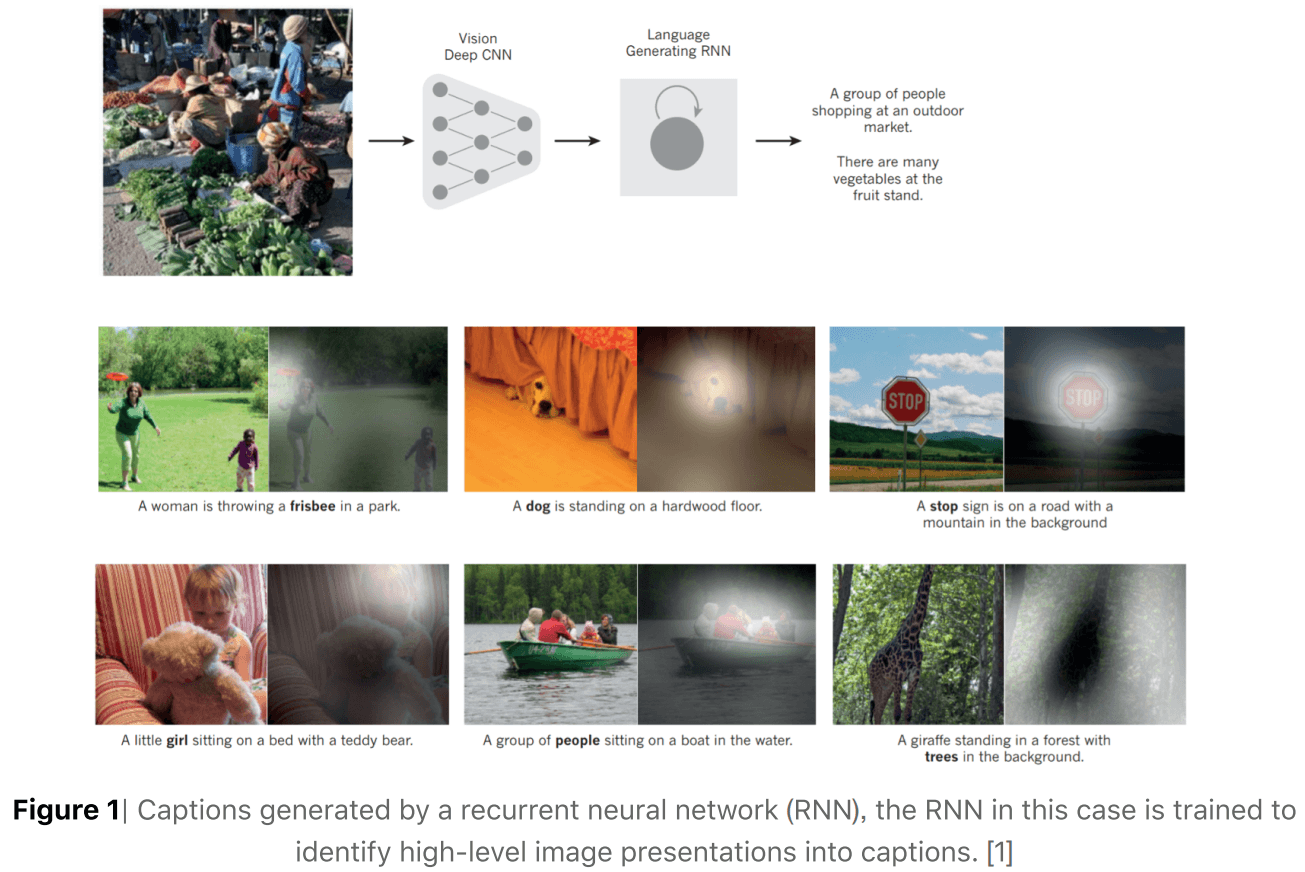

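"Traditional" deep learning combines different kinds of feed-forward modules (often convolutional neural networks, CNNs) with recurrent neural networks (RNNs), sometimes equipped with memory units such as LSTM [4] or MemNN [5]. These models are limited in their capacity to "reason", for example to carry out long chains of deductions or to streamline a method for arriving at an answer. The number of steps in a computation is bounded by the number of layers in a feed-forward network and by the timespan over which a recurrent network can recollect things.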

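There is also the problem of murkiness. Once a deep model has been trained, it is often unclear how it makes decisions [6]. In many settings this is unacceptable, whether or not the model finds the right answer. For example, a bank may use AI to assess a customer's creditworthiness and then decide whether to deny a loan. In many US states, the law requires the bank to explain why a loan was denied. If the bank relies on a deep model for lending decisions, its loan department will likely be unable to give a clear explanation for a denial.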

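Most important is the problem of common sense. Deep learning models are good at perceiving patterns, but they cannot comprehend what the patterns mean, much less reason about their causes. To let deep learning models reason, their structure has to change so that they do not produce a single output (for example, the interpretation of an image or the translation of a paragraph) but instead deliver a whole set of alternative outputs (for example, the different ways a sentence can be translated). This is what energy-based models are meant to do: give a score for every conceivable configuration of the variables to be inferred.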
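The idea of scoring every configuration, rather than emitting one answer, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the formulation of any particular energy-based model: the `energy` function, the `weights`, and the `coupling` value are all hypothetical, chosen only so that the full table of scores is small enough to enumerate.

```python
import itertools
import math

def energy(config, weights, coupling):
    """Toy energy (hypothetical): lower values mean a more compatible configuration."""
    # Per-variable term plus a pairwise penalty for neighbors that disagree.
    unary = sum(w * v for w, v in zip(weights, config))
    pairwise = sum(coupling * (a != b) for a, b in zip(config, config[1:]))
    return unary + pairwise

def score_all(weights, coupling):
    """Return (config, energy, probability) for every binary configuration."""
    configs = list(itertools.product([0, 1], repeat=len(weights)))
    energies = [energy(c, weights, coupling) for c in configs]
    # Boltzmann weights turn energies into a distribution over all configurations.
    unnorm = [math.exp(-e) for e in energies]
    total = sum(unnorm)
    return [(c, e, u / total) for c, e, u in zip(configs, energies, unnorm)]

# Assumed example values; any other weights would work the same way.
scores = score_all(weights=[-1.0, 0.5, -0.2], coupling=0.8)
for config, e, p in sorted(scores, key=lambda item: item[1]):
    print(config, round(e, 2), round(p, 3))
```

The output is a ranked list of all eight configurations instead of a single prediction, so a downstream component can inspect the alternatives and how far apart their scores are. Exhaustive enumeration only works for tiny problems; practical models need approximate inference.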

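These weaknesses are drawing growing public attention to AI, especially in autonomous vehicles, which use similar deep learning strategies to navigate the road [7] and whose failures can be a matter of life and death [8]. The public has begun to say that AI may be a problem, especially in a world that expects perfection. Even though self-driving cars that rely on deep learning cause far fewer casualties than human drivers, people will not fully trust autonomous vehicles until there are no casualties at all.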

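Moreover, deep learning in its current form is fundamentally limited, because almost all of its practical applications [19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32] use supervised learning on human-annotated data. This is a major weakness: it makes deep neural networks hard to apply where input data is scarce. We need effective ways to train large neural networks from "crude", non-annotated data so that they capture the regularities of the real world. Combining deep learning with adversarial machine learning techniques [17, 18] may be one way forward.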

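It is hard for the general public to form a fair understanding of deep learning. If deep learning were confined to AI research labs, that would be one thing. However, deep learning techniques are now being used in every possible application. The degree of trust that tech executives and marketers place in deep learning is worrisome. Deep learning is an incredible technology, but it is important to explore not only its strengths but also its weaknesses, so that a plan of action is easier to draw up.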

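In an interesting piece of research, Towards Literate Artificial Intelligence [33], Mrinmaya Sachan argues that despite the notable progress deep learning has brought to AI, today's AI systems still lack the intrinsic nature of human intelligence. He then digs deeper and asks: before we begin building AI systems with human capabilities (reasoning, understanding, common sense), how should we evaluate AI systems on these tasks, so that we can understand them more thoroughly and develop truly intelligent systems? His research proposes using standardized tests on AI systems (similar to the tests students take in the formal education system) through two frameworks for developing AI systems further, with the notable benefit that the results can be applied for social good and education.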

On Deep Learning and Decision Making: Do We Have a True Theoretical Understanding of a Neural Network?

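An artificial neural network attempts to mimic the architecture of the human brain, with many connected artificial neurons (nodes). The network itself is not an algorithm but a framework on which various machine learning algorithms can run to accomplish a desired task. The foundations of neural network engineering are almost entirely heuristic, with little emphasis on the choice of network architecture; unfortunately, there is no definite theory that tells us how to choose the right number of neurons for a given model. Theoretical work on the number of neurons and the overall capacity of a model does exist [12, 13, 14], but it is rarely practical to apply.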
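While no theory picks the right number of neurons, it is easy to see how quickly the raw number of trainable parameters grows with layer width. The sketch below counts weights and biases for a fully connected feed-forward network. The layer widths are hypothetical, and parameter count is only a crude proxy for the notions of capacity studied in [12, 13, 14].

```python
def count_parameters(layer_sizes):
    """Number of weights and biases in a fully connected feed-forward network."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # weight matrix plus one bias per output neuron
    return total

# Hypothetical layer widths for a 784-input, 10-class classifier.
for hidden in (16, 64, 256):
    sizes = [784, hidden, hidden, 10]
    print(hidden, count_parameters(sizes))
```

In practice the width is still chosen heuristically, for example by trying several values as in the loop above and comparing validation performance.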

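Princeton professor Sanjeev Arora takes a vivid approach to the generalization theory of deep neural networks [15], in which he states the generalization mystery of deep learning as: why do trained deep neural networks perform well on previously unseen data? For example, a deep learning model can be trained on ImageNet with random labels and still reach high training accuracy [16]. However, the usual regularization strategies that are meant to improve generalization do not help much. Regardless, the trained network still cannot predict the random labels of unseen images, which in turn means that the network does not generalize.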
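The random-label effect can be reproduced in miniature. The sketch below is not the ImageNet experiment: as an assumption made to keep it dependency-free, an over-parameterized linear perceptron stands in for a deep network, and Gaussian vectors stand in for images. The function names and the sizes (40 training examples, 200 input dimensions) are likewise illustrative choices.

```python
import random

def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))

def make_data(rng, n, dim):
    """Gaussian inputs with coin-flip labels, so inputs carry no label information."""
    xs = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(n)]
    ys = [rng.choice([-1, 1]) for _ in range(n)]
    return xs, ys

def train_perceptron(xs, ys, max_epochs=1000):
    """Classic perceptron updates until every training label is reproduced."""
    w = [0.0] * len(xs[0])
    for _ in range(max_epochs):
        mistakes = 0
        for x, y in zip(xs, ys):
            if y * dot(w, x) <= 0:
                w = [wi + y * xi for wi, xi in zip(w, x)]
                mistakes += 1
        if mistakes == 0:
            break
    return w

def accuracy(w, xs, ys):
    return sum(1 for x, y in zip(xs, ys) if y * dot(w, x) > 0) / len(xs)

rng = random.Random(0)
train_x, train_y = make_data(rng, n=40, dim=200)   # far more weights than examples
test_x, test_y = make_data(rng, n=1000, dim=200)   # fresh inputs, fresh random labels

w = train_perceptron(train_x, train_y)
train_acc = accuracy(w, train_x, train_y)
test_acc = accuracy(w, test_x, test_y)
print(f"train accuracy: {train_acc:.2f}, test accuracy: {test_acc:.2f}")
```

Because the model has many more parameters than training examples, it can memorize labels that contain no signal at all, while accuracy on unseen data stays near chance. High training accuracy by itself therefore says nothing about generalization.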

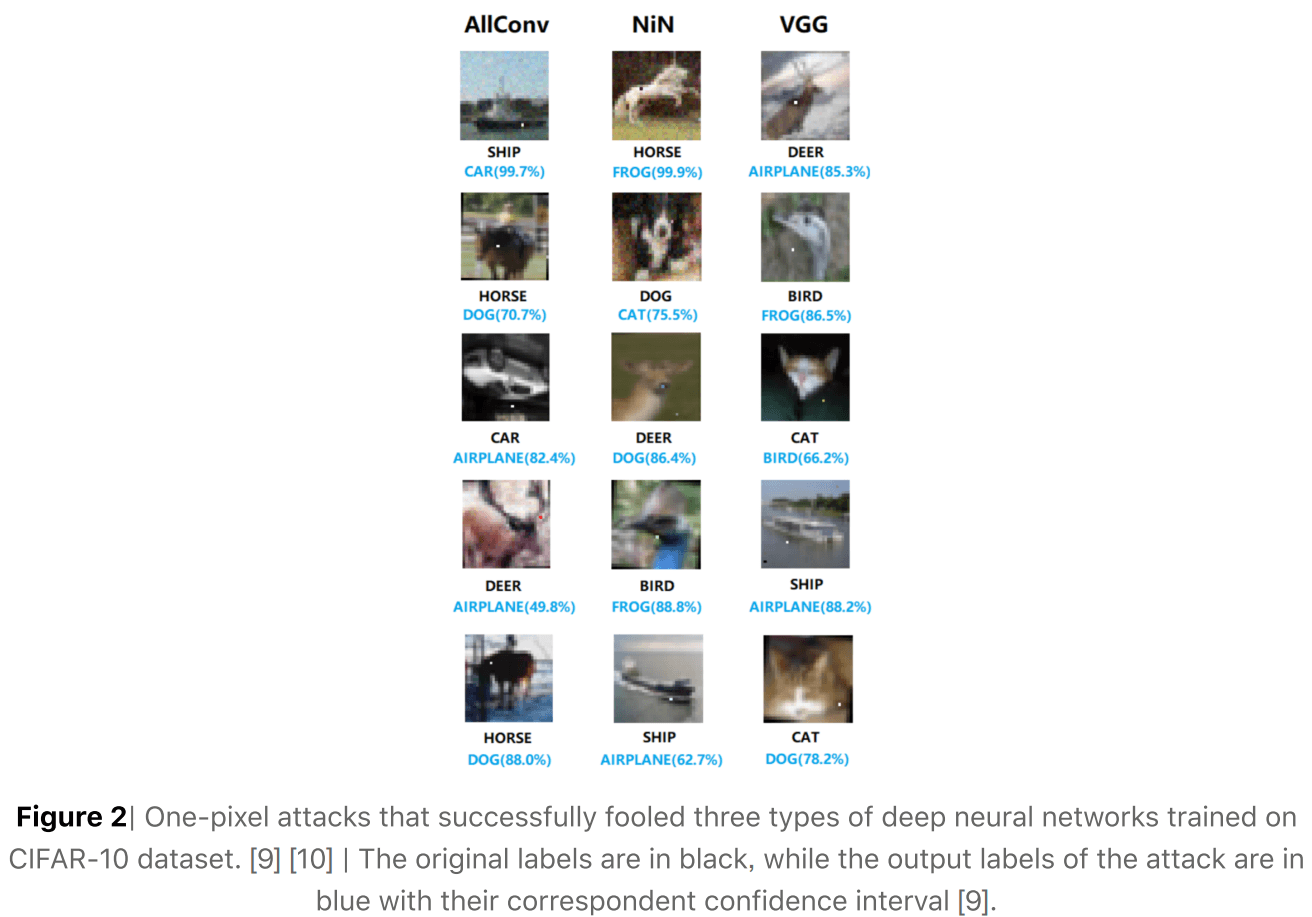

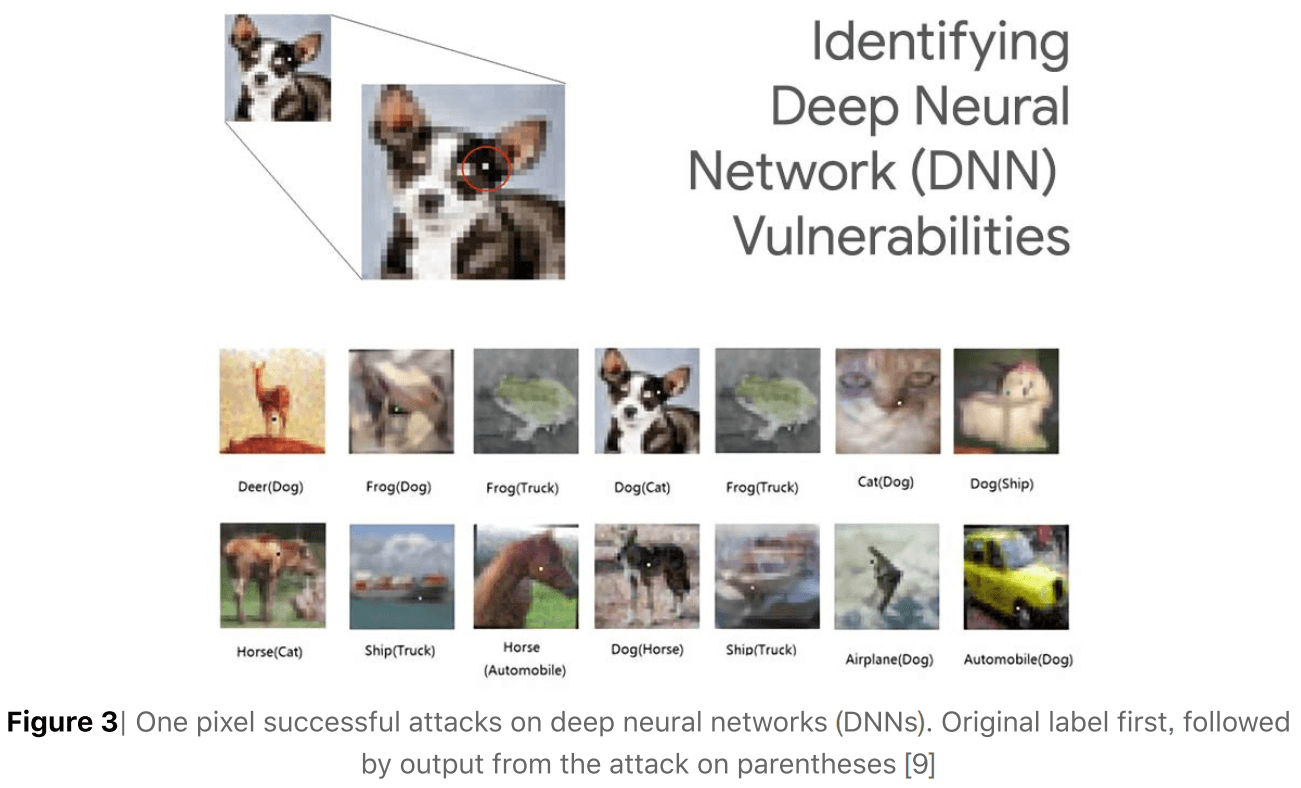

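Recently, researchers exposed vulnerabilities in deep neural network architectures by adding small nuances to images from a large image dataset in order to alter the model's output [9]. The study follows several others that show similar brittleness of model outputs to small changes in the input. Results of this kind do not inspire confidence. In autonomous vehicles, for example, the environment is full of small nuances of all kinds (rain, snow, fog, shadows, false positives, and so on), and it is easy to imagine a visual system being thrown off by a small change in its visual input. Tesla, Uber, and several others have surely identified these issues and are working on plans to address them, but it is important that the public is aware of them as well.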
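To make the brittleness concrete, here is a toy version of a single-pixel change flipping a prediction. It is a sketch under heavy simplification: the attack in [9] uses differential evolution against real deep networks, whereas this example brute-forces a hypothetical 3x3 "image" against a hand-picked linear classifier. All weights, the bias, and the pixel values are made up for illustration.

```python
def predict(weights, bias, pixels):
    """Toy linear classifier: class 1 if the weighted pixel sum is positive."""
    score = sum(w * p for w, p in zip(weights, pixels)) + bias
    return 1 if score > 0 else 0

def find_one_pixel_flip(weights, bias, pixels):
    """Brute-force search for a single pixel change that flips the prediction."""
    original = predict(weights, bias, pixels)
    for index in range(len(pixels)):
        for value in (0.0, 1.0):  # push one pixel to either extreme of its range
            candidate = list(pixels)
            candidate[index] = value
            if predict(weights, bias, candidate) != original:
                return index, value
    return None  # no single-pixel change flips this input

# Hypothetical 3x3 "image" and hand-picked weights, flattened row by row.
weights = [0.2, -0.1, 0.05, 0.9, -0.3, 0.1, -0.05, 0.15, -0.2]
bias = -0.2
image = [0.5] * 9

flip = find_one_pixel_flip(weights, bias, image)
print("original class:", predict(weights, bias, image))
print("flipping change (pixel index, new value):", flip)
```

Here the classifier leans heavily on one pixel, so changing that pixel alone is enough to cross the decision boundary. Deep networks are far more complex, but the study in [9] suggests they can have comparably sensitive directions in input space.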

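Nowadays we are surrounded by technology: from the smart gadgets in our homes and the smartphones in our pockets to the computers on our desks and the routers that connect us to the internet. In each of these technologies, the base architecture works properly thanks to the solid engineering principles it was built on, including deep mathematics, physics, electrical, computer, and software engineering, and above all the years, if not decades, of statistical testing and quality assurance in all of these fields.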

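It is important to remember that deep learning models need a large amount of data to train an initial model (to reach high accuracy without overfitting; keep in mind that subsequent tasks can learn via transfer learning). Ultimately, without a deep understanding of what is really happening inside a deep neural architecture, building technological solutions that are sustainable in the long run is neither practical nor theoretically sound.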

Acknowledgements

The author would like to thank Matt Gormley, Assistant Professor at Carnegie Mellon University, and Arthur Chan, Principal Speech Architect, Curator of AIDL.io and Deep Learning Specialist, for constructive criticism in preparation of this article.

Related material

[1] - Machine Learning Startup Petuum Aims to Industrialize AI

[2] - Contextual Parameter Generation for Neural Machine Translation

[3] - The ABCs of Machine Learning Experts Who Are Driving the World in AI

[4] - Differences Between AI and Machine Learning and Why it Matters

[5] - The 50 Best Public Datasets for Machine Learning

References

[1] Deep Learning Review | Yann LeCun, Yoshua Bengio, Geoffrey Hinton | http://pages.cs.wisc.edu/~dyer/cs540/handouts/deep-learning-nature2015.pdf

[2] 30 Amazing Applications of Deep Learning | Yaron Hadad | http://www.yaronhadad.com/deep-learning-most-amazing-applications/

[3] Introduction to Deep Learning | Bhiksha Raj | Carnegie Mellon University | http://deeplearning.cs.cmu.edu/

[4] Understanding LSTM Networks | Christopher Olah | http://colah.github.io/posts/2015-08-Understanding-LSTMs/

[5] Memory Augmented Neural-Networks | Facebook AI Research | https://github.com/facebook/MemNN

[6] The Dark Secret at the Heart of Artificial Intelligence | MIT Technology Review | https://www.technologyreview.com/s/604087/the-dark-secret-at-the-heart-of-ai/

[7] MIT 6.S094: Deep Learning for Self-Driving Cars | Massachusetts Institute of Technology | https://selfdrivingcars.mit.edu/

[8] List of Self Driving Car Fatalities | Wikipedia | https://en.wikipedia.org/wiki/List_of_self-driving_car_fatalities

[9] One Pixel Attack for Fooling Deep Neural Networks | Jiawei Su, Danilo Vasconcellos Vargas, Kouichi Sakurai | https://arxiv.org/pdf/1710.08864.pdf

[10] Canadian Institute for Advanced Research Dataset | CIFAR-10 Dataset | https://www.cs.toronto.edu/~kriz/cifar.html

[11] Images, courtesy of Machine Learning Memoirs | https://mlmemoirs.xyz

[12] Deep Neural Network Capacity | Aosen Wang, Hua Zhou, Wenyao Xu, Xin Chen | Arxiv | https://arxiv.org/abs/1708.05029

[13] On Characterizing the Capacity of Neural Networks Using Algebraic Topology | William H. Guss, Ruslan Salakhutdinov | Machine Learning Department, School of Computer Science, Carnegie Mellon University | https://arxiv.org/pdf/1802.04443.pdf

[14] Information Theory, Complexity, and Neural Networks | Yaser S. Abu-Mostafa | California Institute of Technology | http://work.caltech.edu/pub/Abu-Mostafa1989nnet.pdf

[15] Generalization Theory and Deep Nets, An Introduction | Sanjeev Arora | Princeton University | http://www.offconvex.org/2017/12/08/generalization1/

[16] Understanding Deep Learning Requires Re-Thinking Generalization | Chiyuan Zhang, Samy Bengio, Moritz Hardt, Benjamin Recht, Oriol Vinyals | https://arxiv.org/pdf/1611.03530.pdf

[17] The Limitations of Deep Learning in Adversarial Settings | Nicolas Papernot, Patrick McDaniel, Somesh Jha, Matt Fredrikson, Z. Berkay Celik, Ananthram Swami | Proceedings of the 1st IEEE European Symposium on Security and Privacy, IEEE 2016. Saarbrücken, Germany | http://patrickmcdaniel.org/pubs/esp16.pdf

[18] Machine Learning in Adversarial Settings | Patrick McDaniel, Nicolas Papernot, and Z. Berkay Celik | Pennsylvania State University | http://patrickmcdaniel.org/pubs/ieeespmag16.pdf

[19] Alex Krizhevsky, Ilya Sutskever, and Geoffrey E. Hinton. ImageNet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems, 2012.

[20] Yaniv Taigman, Ming Yang, Marc’Aurelio Ranzato, and Lior Wolf. DeepFace: Closing the gap to human-level performance in face verification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 1701–1708, 2014.

[21] Karen Simonyan and Andrew Zisserman. Very deep convolutional networks for large-scale image recognition. In International Conference on Learning Representations, 2015.

[22] Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed, Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, Andrew Rabinovich, et al. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2015.

[23] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification. In Proceedings of the IEEE International Conference on Computer Vision, pages 1026–1034, 2015.

[24] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 770–778, 2016.

[25] Geoffrey Hinton, Li Deng, Dong Yu, George E Dahl, Abdel-rahman Mohamed, Navdeep Jaitly, Andrew Senior, Vincent Vanhoucke, Patrick Nguyen, Tara N Sainath, et al. Deep neural networks for acoustic modeling in speech recognition: The shared views of four research groups. IEEE Signal Processing Magazine, 29(6):82–97, 2012.

[26] Awni Hannun, Carl Case, Jared Casper, Bryan Catanzaro, Greg Diamos, Erich Elsen, Ryan Prenger, Sanjeev Satheesh, Shubho Sengupta, Adam Coates, et al. Deep speech: Scaling up end-to-end speech recognition. arXiv preprint arXiv:1412.5567, 2014.

[27] Wayne Xiong, Jasha Droppo, Xuedong Huang, Frank Seide, Mike Seltzer, Andreas Stolcke, Dong Yu, and Geoffrey Zweig. Achieving human parity in conversational speech recognition. arXiv preprint arXiv:1610.05256, 2016.

[28] Chung-Cheng Chiu, Tara N Sainath, Yonghui Wu, Rohit Prabhavalkar, Patrick Nguyen, Zhifeng Chen, Anjuli Kannan, Ron J Weiss, Kanishka Rao, Katya Gonina, et al. State-of-the-art speech recognition with sequence-to-sequence models. arXiv preprint arXiv:1712.01769, 2017.

[29] Dzmitry Bahdanau, Kyunghyun Cho, and Yoshua Bengio. Neural machine translation by jointly learning to align and translate. In International Conference on Learning Representations, 2015.

[30] Ilya Sutskever, Oriol Vinyals, and Quoc V Le. Sequence to sequence learning with neural networks. In Advances in Neural Information Processing Systems, pages 3104–3112, 2014.

[31] Yonghui Wu, Mike Schuster, Zhifeng Chen, Quoc V Le, Mohammad Norouzi, Wolfgang Macherey, Maxim Krikun, Yuan Cao, Qin Gao, Klaus Macherey, et al. Google’s neural machine translation system: Bridging the gap between human and machine translation. arXiv preprint arXiv:1609.08144, 2016.

[32] Hany Hassan, Anthony Aue, Chang Chen, Vishal Chowdhary, Jonathan Clark, Christian Federmann, Xuedong Huang, Marcin Junczys-Dowmunt, William Lewis, Mu Li, et al. Achieving human parity on automatic Chinese to English news translation. arXiv preprint arXiv:1803.05567, 2018.

[33] Mrinmaya Sachan, Towards Literate Artificial Intelligence, Machine Learning Department at Carnegie Mellon University, https://pdfs.semanticscholar.org/25c5/6f52c528112da99d0ae7e559500ef7532d3a.pdf