1. BF Matching

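BF matching refers to the Brute-Force Matcher. The principle is simple: take the descriptor of one feature in set A, compute its distance to every feature descriptor in set B under some distance measure, and return the closest one.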
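The principle can be illustrated without OpenCV. The following is a minimal sketch in plain Python, assuming Euclidean (L2) distance and descriptors stored as lists of floats; the function names `l2_distance` and `brute_force_match` are made up for this illustration and are not part of OpenCV.

```python
import math

def l2_distance(a, b):
    """Euclidean distance between two equal-length descriptor vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def brute_force_match(set_a, set_b, distance=l2_distance):
    """For each descriptor in set_a, return (index_a, index_b, distance)
    of its closest descriptor in set_b."""
    matches = []
    for i, desc_a in enumerate(set_a):
        # Compare against every descriptor in set_b and keep the closest one.
        j, d = min(
            ((j, distance(desc_a, desc_b)) for j, desc_b in enumerate(set_b)),
            key=lambda pair: pair[1],
        )
        matches.append((i, j, d))
    return matches

# Toy 2-D "descriptors"; real descriptors have many more dimensions.
set_a = [[0.0, 0.0], [5.0, 5.0]]
set_b = [[4.0, 6.0], [1.0, 0.0], [9.0, 9.0]]
matches = brute_force_match(set_a, set_b)
for i, j, d in matches:
    print(f"A[{i}] -> B[{j}] (distance {d:.3f})")
```

Every query descriptor is compared with every candidate, so the cost grows with the product of the two set sizes. That is what makes the approach "brute force".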

In OpenCV, first create a BFMatcher instance with cv2.BFMatcher(). It takes two optional parameters: normType and crossCheck.

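```python
# Signature sketch only, summarizing the parameters; not runnable on its own.
class BFMatcher(DescriptorMatcher):
    def create(self, normType=None, crossCheck=None):
        """
        normType: one of NORM_L1, NORM_L2, NORM_HAMMING, NORM_HAMMING2.
            NORM_L1 and NORM_L2 are better suited to SIFT and SURF descriptors;
            NORM_HAMMING is used with ORB, BRISK and BRIEF;
            NORM_HAMMING2 is used with ORB descriptors when WTA_K == 3 or 4.
        crossCheck: False by default, in which case the matcher finds the
            k nearest neighbors of each query descriptor.
            If True, knnMatch() with k=1 returns only pairs (i, j) such that
            the j-th descriptor in set B is the best match for the i-th
            descriptor in set A, and vice versa. In other words, the two
            descriptors match each other.
            This gives consistent results and, when there are enough
            matches, usually finds the best ones.
        """
        pass
```

After instantiating BFMatcher, the two important methods are BFMatcher.match() and BFMatcher.knnMatch(). The former returns only the best match for each query descriptor; the latter returns the k best matches.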
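To make the crossCheck behavior concrete, here is a minimal sketch of mutual nearest-neighbor filtering with Hamming distance, written in plain Python. It assumes binary descriptors stored as `bytes`; the helper names are hypothetical, and this illustrates the idea rather than OpenCV's actual implementation.

```python
def hamming_distance(a, b):
    """Number of differing bits between two equal-length byte sequences."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))

def nearest(query, candidates):
    """Index of the candidate closest to query (ties go to the lowest index)."""
    return min(range(len(candidates)),
               key=lambda j: hamming_distance(query, candidates[j]))

def cross_check_match(set_a, set_b):
    """Keep (i, j) only if B[j] is nearest to A[i] and A[i] is nearest to B[j]."""
    pairs = []
    for i, desc_a in enumerate(set_a):
        j = nearest(desc_a, set_b)
        # The reverse lookup must point back to the same descriptor in set A.
        if nearest(set_b[j], set_a) == i:
            pairs.append((i, j))
    return pairs

# One-byte toy descriptors; ORB descriptors are 32 bytes long by default.
set_a = [bytes([0b00000000]), bytes([0b11110000]), bytes([0b00000001])]
set_b = [bytes([0b11110001]), bytes([0b00000000])]
mutual = cross_check_match(set_a, set_b)
print(mutual)  # [(0, 1), (1, 0)]
```

Here A[2] is closest to B[1], but B[1] is closer to A[0], so the pair (2, 1) is discarded. This is how cross-checking removes one-sided matches.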

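Just as cv2.drawKeypoints() draws keypoints, cv2.drawMatches() draws matches. It stacks the two images horizontally and draws lines from points in the first image to points in the second image to show the best matches. cv2.drawMatchesKnn() draws the k best matches.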

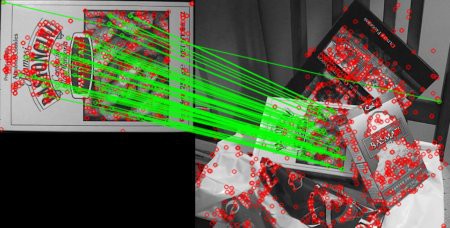

2. BF Matching with ORB Descriptors

```python
import numpy as np
import cv2
from matplotlib import pyplot as plt

img1 = cv2.imread('box.png', cv2.IMREAD_GRAYSCALE)           # queryImage
img2 = cv2.imread('box_in_scene.png', cv2.IMREAD_GRAYSCALE)  # trainImage

# Initiate ORB detector (cv2.ORB_create() in OpenCV 3 and later)
orb = cv2.ORB_create()

# Find the keypoints and descriptors with ORB
kp1, des1 = orb.detectAndCompute(img1, None)
kp2, des2 = orb.detectAndCompute(img2, None)

# Create BFMatcher object; Hamming distance suits ORB's binary descriptors
bf = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)

# Match descriptors
matches = bf.match(des1, des2)

# Sort them in order of distance (best matches first)
matches = sorted(matches, key=lambda x: x.distance)

# Draw the first 10 matches. outImg is None so a new image is created;
# flags=2 skips keypoints that have no match.
img3 = cv2.drawMatches(img1, kp1, img2, kp2, matches[:10], None, flags=2)

plt.imshow(img3)
plt.show()
```

3. Matcher Result Objects (DMatch)

The result of matches = bf.match(des1, des2) is a list of DMatch objects. Each DMatch object has the following attributes:

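- DMatch.distance: distance between the descriptors; the lower, the better.
- DMatch.trainIdx: index of the descriptor in the train descriptors.
- DMatch.queryIdx: index of the descriptor in the query descriptors.
- DMatch.imgIdx: index of the train image.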
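These attributes are typically used to sort matches and to look up the matched keypoints. The sketch below shows the pattern in plain Python. `Match` and `KeyPoint` are hypothetical stand-ins that only mirror the attribute names of cv2.DMatch and cv2.KeyPoint, so that the example runs without OpenCV; the coordinates and distances are invented.

```python
from collections import namedtuple

# Hypothetical stand-ins mirroring the attribute names of cv2.DMatch and
# cv2.KeyPoint (its .pt attribute holds the (x, y) coordinates).
Match = namedtuple("Match", ["queryIdx", "trainIdx", "imgIdx", "distance"])
KeyPoint = namedtuple("KeyPoint", ["pt"])

# Query keypoints (first image) and train keypoints (second image).
kp1 = [KeyPoint((10.0, 20.0)), KeyPoint((30.0, 40.0))]
kp2 = [KeyPoint((11.0, 21.0)), KeyPoint((99.0, 99.0)), KeyPoint((31.0, 42.0))]

matches = [Match(1, 2, 0, 35.0), Match(0, 0, 0, 12.0)]

# Sort best-first, then use queryIdx / trainIdx to recover the coordinates.
matches = sorted(matches, key=lambda m: m.distance)
point_pairs = [(kp1[m.queryIdx].pt, kp2[m.trainIdx].pt) for m in matches]
for src, dst in point_pairs:
    print(src, "->", dst)
```

With real OpenCV results the same indexing applies: queryIdx indexes into kp1 and trainIdx into kp2.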

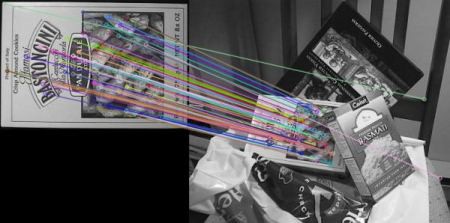

4. BF Matching with SIFT Descriptors and Ratio Test

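Here BFMatcher.knnMatch() is used to get the k best matches. The example sets k=2 so that the ratio test from D. Lowe's paper can be applied: a match is kept only if its distance is clearly smaller than the distance of the second-best match.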
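The ratio test itself is a one-line comparison. Here is a minimal sketch in plain Python, assuming each k=2 result has been reduced to a pair of distances (best, second best); the function name `ratio_test` and the sample distances are invented for illustration.

```python
def ratio_test(knn_distances, ratio=0.75):
    """knn_distances: list of (best_distance, second_best_distance) pairs.
    Returns the indices whose best match passes the ratio test."""
    return [i for i, (best, second) in enumerate(knn_distances)
            if best < ratio * second]

knn_distances = [
    (10.0, 50.0),  # best match is far better than the runner-up: keep
    (30.0, 35.0),  # two candidates are almost equally good: ambiguous, drop
    (20.0, 27.0),  # borderline: passes at 0.75, fails at 0.7
]
kept = ratio_test(knn_distances)
kept_strict = ratio_test(knn_distances, ratio=0.7)
print(kept, kept_strict)  # [0, 2] [0]
```

A lower ratio keeps fewer but more distinctive matches, so the threshold trades recall for precision.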

```python
import numpy as np
import cv2
from matplotlib import pyplot as plt

img1 = cv2.imread('box.png', cv2.IMREAD_GRAYSCALE)           # queryImage
img2 = cv2.imread('box_in_scene.png', cv2.IMREAD_GRAYSCALE)  # trainImage

# Initiate SIFT detector (cv2.SIFT_create() requires OpenCV 4.4 or later)
sift = cv2.SIFT_create()

# Find the keypoints and descriptors with SIFT
kp1, des1 = sift.detectAndCompute(img1, None)
kp2, des2 = sift.detectAndCompute(img2, None)

# BFMatcher with default params (NORM_L2, crossCheck=False)
bf = cv2.BFMatcher()
matches = bf.knnMatch(des1, des2, k=2)

# Apply ratio test: keep m only if it is clearly better than the runner-up n
good = []
for m, n in matches:
    if m.distance < 0.75 * n.distance:
        good.append([m])

# cv2.drawMatchesKnn expects a list of lists as matches.
# outImg is None so a new image is created; flags=2 skips unmatched keypoints.
img3 = cv2.drawMatchesKnn(img1, kp1, img2, kp2, good, None, flags=2)

plt.imshow(img3)
plt.show()
```

5. FLANN Matching

FLANN stands for Fast Library for Approximate Nearest Neighbors. It is a library of algorithms optimized for fast nearest-neighbor search in large, high-dimensional datasets.

The FLANN matcher takes two dictionaries that specify the algorithm to use and its related parameters: IndexParams and SearchParams.
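As a rough sketch of how the two dictionaries might be assembled, the helper below picks the index parameters by descriptor type. `make_flann_params` is a hypothetical helper, not an OpenCV function. The two algorithm identifiers are defined by hand, as OpenCV's Python tutorials do, and their values (1 and 6) are assumed from FLANN's algorithm enumeration.

```python
# FLANN algorithm identifiers, defined by hand (assumed values from
# FLANN's algorithm enumeration).
FLANN_INDEX_KDTREE = 1
FLANN_INDEX_LSH = 6

def make_flann_params(binary_descriptors, checks=50):
    """Hypothetical helper: return (index_params, search_params)."""
    if binary_descriptors:
        # Binary descriptors such as ORB: locality-sensitive hashing.
        index_params = dict(algorithm=FLANN_INDEX_LSH,
                            table_number=6,
                            key_size=12,
                            multi_probe_level=1)
    else:
        # Float descriptors such as SIFT or SURF: randomized KD-trees.
        index_params = dict(algorithm=FLANN_INDEX_KDTREE, trees=5)
    search_params = dict(checks=checks)
    return index_params, search_params

sift_index, sift_search = make_flann_params(binary_descriptors=False)
orb_index, orb_search = make_flann_params(binary_descriptors=True, checks=100)
print(sift_index, sift_search)
print(orb_index, orb_search)
```

The returned pair would then be passed to cv2.FlannBasedMatcher(index_params, search_params).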

[1] - IndexParams

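With SIFT, SURF and similar descriptors, you can pass the following:

```python
FLANN_INDEX_KDTREE = 1  # FLANN's identifier for the KD-tree algorithm
index_params = dict(algorithm=FLANN_INDEX_KDTREE, trees=5)
```

With ORB descriptors, you can pass the following: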

```python
FLANN_INDEX_LSH = 6  # FLANN's identifier for locality-sensitive hashing
index_params = dict(algorithm=FLANN_INDEX_LSH,
                    table_number=6,       # 12
                    key_size=12,          # 20
                    multi_probe_level=1)  # 2
```

[2] - SearchParams

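The checks parameter specifies how many times the trees in the index should be recursively traversed. Higher values give better precision but take longer to compute.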

```python
search_params = dict(checks=100)
```

6. FLANN Matching with SIFT Descriptors and Ratio Test

```python
import numpy as np
import cv2
from matplotlib import pyplot as plt

img1 = cv2.imread('box.png', cv2.IMREAD_GRAYSCALE)           # queryImage
img2 = cv2.imread('box_in_scene.png', cv2.IMREAD_GRAYSCALE)  # trainImage

# Initiate SIFT detector (cv2.SIFT_create() requires OpenCV 4.4 or later)
sift = cv2.SIFT_create()

# Find the keypoints and descriptors with SIFT
kp1, des1 = sift.detectAndCompute(img1, None)
kp2, des2 = sift.detectAndCompute(img2, None)

# FLANN parameters (1 selects the KD-tree index; 0 would be a linear search)
FLANN_INDEX_KDTREE = 1
index_params = dict(algorithm=FLANN_INDEX_KDTREE, trees=5)
search_params = dict(checks=50)  # or pass an empty dictionary

flann = cv2.FlannBasedMatcher(index_params, search_params)
matches = flann.knnMatch(des1, des2, k=2)

# Only the good matches should be drawn, so create a mask
matchesMask = [[0, 0] for _ in range(len(matches))]

# Ratio test as per Lowe's paper
for i, (m, n) in enumerate(matches):
    if m.distance < 0.7 * n.distance:
        matchesMask[i] = [1, 0]  # draw the best match, hide the runner-up

draw_params = dict(matchColor=(0, 255, 0),
                   singlePointColor=(255, 0, 0),
                   matchesMask=matchesMask,
                   flags=0)

img3 = cv2.drawMatchesKnn(img1, kp1, img2, kp2, matches, None, **draw_params)

plt.imshow(img3)
plt.show()
```