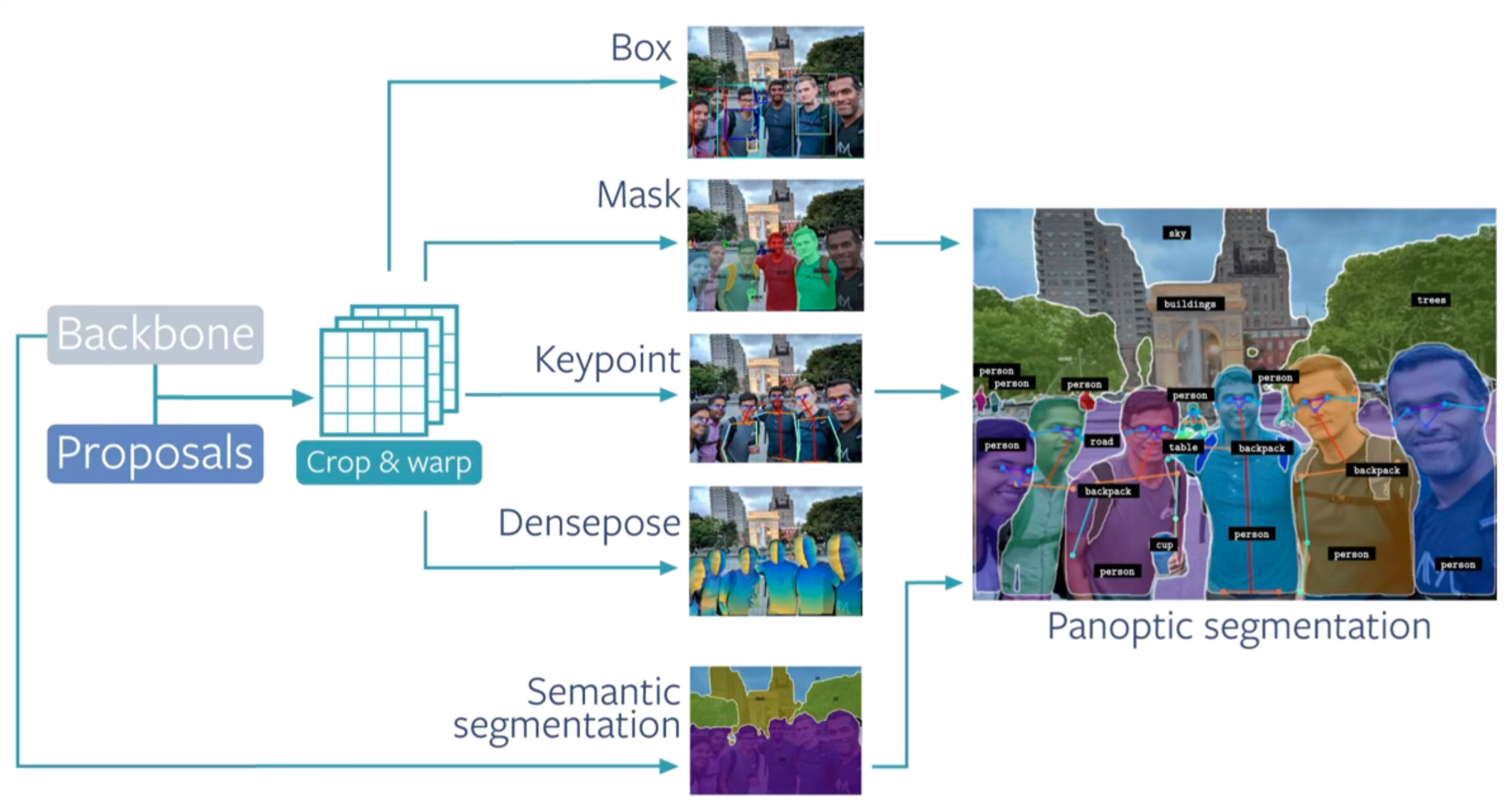

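After open-sourcing Detectron (built on Caffe2) and maskrcnn-benchmark (built on PyTorch), FAIR has released detectron2, a new implementation of object detection algorithms built on PyTorch 1.3, the latest PyTorch release at the time.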

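Key features of detectron2:

[1] - It is built on the PyTorch deep learning framework.

[2] - It includes more features, such as panoptic segmentation, densepose, Cascade R-CNN, and rotated bounding boxes.

[3] - It can be used as a library that supports different projects (detectron2 - projects), and more research projects will be open-sourced this way in the future.

[4] - It trains faster (detectron2 - Benchmarks).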

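1. Installing detectron2

detectron2 installation instructions (the link may need a proxy to open in some regions):

Install detectron2 - Colab Notebook.

The detectron2 - Dockerfile is also a useful reference.

Dependencies:

- Python >= 3.6

- PyTorch >= 1.4 and the matching version of torchvision

- OpenCV, for the demo and visualization

- fvcore

- pycocotools >= 2.0.1

- GCC & G++ >= 5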
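Before building, it can help to confirm that the Python-side dependencies listed above are present. The snippet below is a minimal sketch, not part of detectron2: the helper names `missing_modules` and `python_ok` are made up for illustration, and it checks only whether the modules can be found, not their versions or the GCC version.

```python
import importlib.util
import sys

# Import names for the packages listed above (OpenCV is imported as cv2).
REQUIRED_MODULES = ["torch", "torchvision", "cv2", "fvcore", "pycocotools"]
MIN_PYTHON = (3, 6)


def missing_modules(names):
    """Return the subset of `names` that the import system cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def python_ok(version_info=sys.version_info, minimum=MIN_PYTHON):
    """Return True when the interpreter version is at least `minimum`."""
    return tuple(version_info[:2]) >= tuple(minimum)


if __name__ == "__main__":
    print("Python >= 3.6:", python_ok())
    print("Missing modules:", missing_modules(REQUIRED_MODULES) or "none")
```

An empty "missing" list only means the packages are importable; version constraints such as PyTorch >= 1.4 still need to be checked separately.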

Installing the dependencies:

sudo apt-get update

sudo apt-get install build-essential python3-dev

sudo apt-get install libpng-dev libjpeg-dev python3-opencv

sudo apt-get install ca-certificates pkg-config

sudo apt-get install git curl wget automake libtool

#pip

curl -fSsL -O https://bootstrap.pypa.io/get-pip.py

sudo python3 get-pip.py && rm get-pip.py

#opencv

sudo pip install opencv-python

sudo pip install cloudpickle

sudo pip install matplotlib

sudo pip install tabulate

sudo pip install tensorboard

#torch, torchvision

sudo pip install torch torchvision

#fvcore

sudo pip install 'git+https://github.com/facebookresearch/fvcore'

#pycocotools

sudo pip install cython

sudo pip install 'git+https://github.com/cocodataset/cocoapi.git#subdirectory=PythonAPI'

sudo pip install pycocotools

1.1. Installing from source

detectron2 installation (old, GCC & G++ >= 4.9):

git clone git@github.com:facebookresearch/detectron2.git

cd detectron2

python setup.py build develop

detectron2 installation (new, GCC & G++ >= 5):

Using ninja is recommended for a faster build.

# Option 1

python -m pip install 'git+https://github.com/facebookresearch/detectron2.git'

# If you lack the required permissions

python -m pip install 'git+https://github.com/facebookresearch/detectron2.git' --user

# Option 2:

git clone https://github.com/facebookresearch/detectron2.git

cd detectron2

python -m pip install -e .

# MacOS

#CC=clang CXX=clang++ python -m pip install ......

To rebuild, first delete the old build artifacts with rm -rf build/ **/*.so, then run the build again. Note: if PyTorch has been reinstalled, detectron2 usually needs to be rebuilt as well.

1.2. Installing a pre-built package (Linux only)

Pick the package that matches your environment from the table below.

| CUDA | torch1.5 | torch1.4 |

|---|---|---|

| 10.2 | python -m pip install detectron2 -f https://dl.fbaipublicfiles.com/detectron2/wheels/cu102/torch1.5/index.html | |

| 10.1 | python -m pip install detectron2 -f https://dl.fbaipublicfiles.com/detectron2/wheels/cu101/torch1.5/index.html | python -m pip install detectron2 -f https://dl.fbaipublicfiles.com/detectron2/wheels/cu101/torch1.4/index.html |

| 10.0 | | python -m pip install detectron2 -f https://dl.fbaipublicfiles.com/detectron2/wheels/cu100/torch1.4/index.html |

| 9.2 | python -m pip install detectron2 -f https://dl.fbaipublicfiles.com/detectron2/wheels/cu92/torch1.5/index.html | python -m pip install detectron2 -f https://dl.fbaipublicfiles.com/detectron2/wheels/cu92/torch1.4/index.html |

| cpu | python -m pip install detectron2 -f https://dl.fbaipublicfiles.com/detectron2/wheels/cpu/torch1.5/index.html | python -m pip install detectron2 -f https://dl.fbaipublicfiles.com/detectron2/wheels/cpu/torch1.4/index.html |
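The URLs in the table follow a regular pattern, so the install command can be generated from the CUDA tag and the torch version. This is a small sketch under that assumption; `wheel_index_url` and `install_command` are illustrative helper names, and the set of available combinations is copied from the URLs in the table rather than queried from the server.

```python
# (CUDA tag, torch version) pairs whose URLs appear in the table above.
AVAILABLE = {
    ("cu102", "1.5"),
    ("cu101", "1.5"), ("cu101", "1.4"),
    ("cu100", "1.4"),
    ("cu92", "1.5"), ("cu92", "1.4"),
    ("cpu", "1.5"), ("cpu", "1.4"),
}
BASE = "https://dl.fbaipublicfiles.com/detectron2/wheels"


def wheel_index_url(cuda_tag, torch_version):
    """Build the wheel index URL for one CUDA tag / torch version pair."""
    if (cuda_tag, torch_version) not in AVAILABLE:
        raise ValueError(f"no pre-built wheel listed for {cuda_tag} + torch {torch_version}")
    return f"{BASE}/{cuda_tag}/torch{torch_version}/index.html"


def install_command(cuda_tag, torch_version):
    """Return the pip command that installs detectron2 from that index."""
    return f"python -m pip install detectron2 -f {wheel_index_url(cuda_tag, torch_version)}"


if __name__ == "__main__":
    print(install_command("cu101", "1.5"))
```

Raising on unlisted combinations is deliberate: a silently constructed URL for a missing wheel would only fail later, inside pip.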

2. Basic usage of detectron2

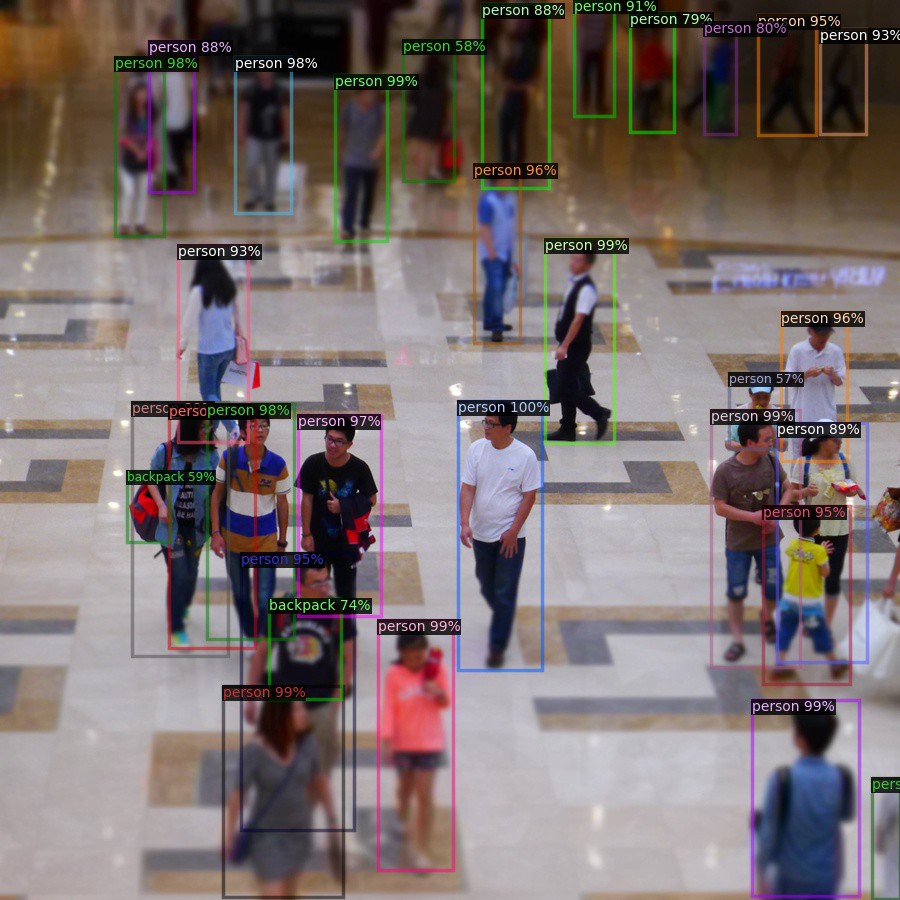

2.1. Object detection

cd $detectron2_root/

python3 demo/demo.py \
  --config-file configs/COCO-Detection/faster_rcnn_R_50_FPN_1x.yaml \
  --input input.jpg --output outputs/ \
  --opts MODEL.WEIGHTS detectron2://COCO-Detection/faster_rcnn_R_50_FPN_1x/137257794/model_final_b275ba.pkl

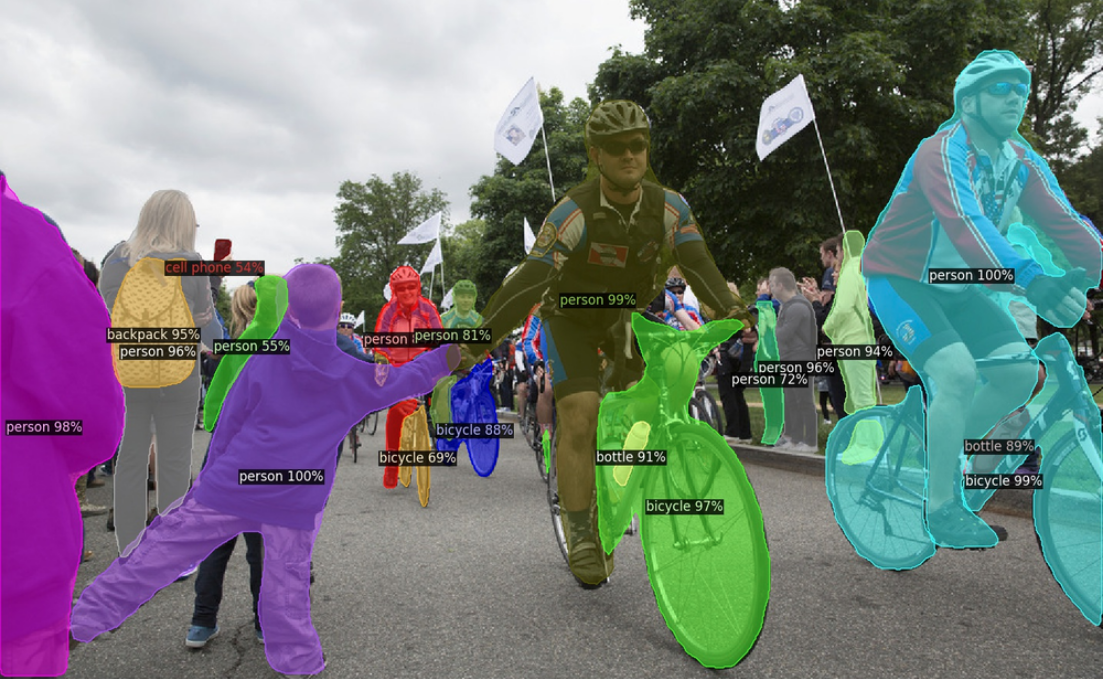

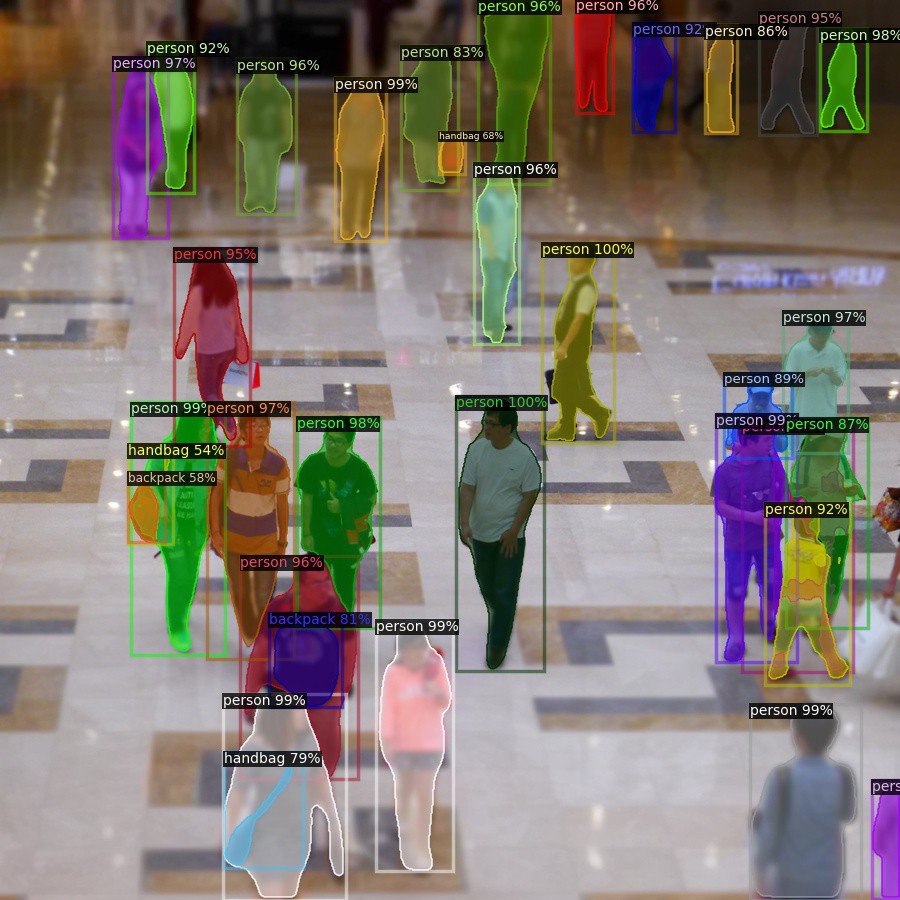

2.2. Instance segmentation 1

cd $detectron2_root/

python3 demo/demo.py \
  --config-file configs/COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml \
  --input input.jpg --output outputs/ \
  --opts MODEL.WEIGHTS detectron2://COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x/137849600/model_final_f10217.pkl

2.3. Instance segmentation 2

Example:

import numpy as np

import cv2

from matplotlib import pyplot as plt

import detectron2

from detectron2.utils.logger import setup_logger

setup_logger()

from detectron2.engine import DefaultPredictor

from detectron2.config import get_cfg

from detectron2.utils.visualizer import Visualizer

from detectron2.data import MetadataCatalog

# Download a test image:

# wget http://images.cocodataset.org/val2017/000000439715.jpg -O input.jpg

im = cv2.imread("./input.jpg")  # BGR image; None if the file cannot be read

plt.figure()

plt.imshow(im[:, :, ::-1])  # BGR -> RGB for matplotlib

plt.show()

# Build the config and the predictor

cfg = get_cfg()

cfg.merge_from_file("./detectron2_repo/configs/COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml")

cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = 0.5  # score threshold for detections

cfg.MODEL.WEIGHTS = "detectron2://COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x/137849600/model_final_f10217.pkl"

predictor = DefaultPredictor(cfg)

outputs = predictor(im)

# Predicted class indices and boxes

pred_classes = outputs["instances"].pred_classes

pred_boxes = outputs["instances"].pred_boxes

# Draw the detections on the original image

v = Visualizer(im[:, :, ::-1], MetadataCatalog.get(cfg.DATASETS.TRAIN[0]), scale=1.2)

v = v.draw_instance_predictions(outputs["instances"].to("cpu"))

plt.figure(2)

plt.imshow(v.get_image())  # get_image() already returns RGB

plt.show()

2.4. Keypoint detection

cfg = get_cfg()

cfg.merge_from_file("./detectron2_repo/configs/COCO-Keypoints/keypoint_rcnn_R_50_FPN_3x.yaml")

cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = 0.7

cfg.MODEL.WEIGHTS = "detectron2://COCO-Keypoints/keypoint_rcnn_R_50_FPN_3x/137849621/model_final_a6e10b.pkl"

predictor = DefaultPredictor(cfg)

outputs = predictor(im)

v = Visualizer(im[:,:,::-1], MetadataCatalog.get(cfg.DATASETS.TRAIN[0]), scale=1.2)

v = v.draw_instance_predictions(outputs["instances"].to("cpu"))

plt.imshow(v.get_image())  # get_image() already returns RGB

plt.show()

2.5. Panoptic segmentation

cfg = get_cfg()

cfg.merge_from_file("./detectron2_repo/configs/COCO-PanopticSegmentation/panoptic_fpn_R_101_3x.yaml")

cfg.MODEL.WEIGHTS = "detectron2://COCO-PanopticSegmentation/panoptic_fpn_R_101_3x/139514519/model_final_cafdb1.pkl"

predictor = DefaultPredictor(cfg)

panoptic_seg, segments_info = predictor(im)["panoptic_seg"]

v = Visualizer(im[:, :, ::-1], MetadataCatalog.get(cfg.DATASETS.TRAIN[0]), scale=1.2)

v = v.draw_panoptic_seg_predictions(panoptic_seg.to("cpu"), segments_info)

plt.imshow(v.get_image())  # get_image() already returns RGB

plt.show()

2.6. Panoptic segmentation on video

cd $detectron2_root/

python demo/demo.py \
  --config-file configs/COCO-PanopticSegmentation/panoptic_fpn_R_101_3x.yaml \
  --video-input ../video-clip.mp4 \
  --confidence-threshold 0.6 \
  --output ../video-output.mkv \
  --opts MODEL.WEIGHTS detectron2://COCO-PanopticSegmentation/panoptic_fpn_R_101_3x/139514519/model_final_cafdb1.pkl

3. detectron2 Model Zoo

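detectron2 provides a large set of baseline models trained between September and October 2019. Their configuration files are under detectron2_root/configs.

Common settings:

[1] - Training platform: Big Basin servers with 8 NVIDIA V100 GPUs, trained with data-parallel sync SGD and a minibatch of 16 images.

[2] - Training environment: CUDA 9.2, cuDNN 7.4.2 or 7.6.3 (the difference between cuDNN versions is negligible).

[3] - Training curves: the training curves and other statistics for each model are available under that model's metrics link.

[4] - The default settings are not directly comparable with Detectron. For example, the default training data augmentation uses scale jittering in addition to horizontal flipping. For accuracy comparisons see Detectron1-Comparisons; for speed comparisons see benchmarks.

[5] - Inference speed is measured with tools/train_net.py --eval-only at batch size 1. Real deployments are usually faster than the reported inference speed because they apply more optimizations.

[6] - Training speed is averaged over the entire training run.

[7] - For Faster/Mask R-CNN, three backbone combinations are provided:

- FPN - a ResNet+FPN backbone with standard conv and FC heads for mask and box prediction, respectively. It gives the best speed/accuracy trade-off, but the other two are still useful for research.

- C4 - a ResNet conv4 backbone with a conv5 head. This is the baseline used in the Faster R-CNN paper.

- DC5 (Dilated-C5) - a ResNet conv5 backbone with dilations in conv5, followed by standard conv and FC heads for mask and box prediction, respectively. This is the setup used in the Deformable ConvNet paper.

[8] - Most models are trained with the 3x schedule (about 37 COCO epochs). Although 1x models are heavily under-trained, some ResNet-50 models with the 1x schedule (about 12 COCO epochs) are still provided for research comparisons.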
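The epoch counts quoted for the 1x and 3x schedules can be reproduced with a little arithmetic. The sketch below relies on two numbers that are not stated in this post and should be read as assumptions: 90,000 iterations for the 1x schedule, and 118,287 images in COCO train2017. The batch size of 16 comes from the common settings above.

```python
COCO_TRAIN2017_IMAGES = 118_287  # assumption: number of images in COCO train2017
IMAGES_PER_BATCH = 16            # minibatch size from the common settings
ITERS_1X = 90_000                # assumption: iterations in the 1x schedule


def coco_epochs(schedule_multiplier):
    """Approximate number of COCO epochs covered by an Nx schedule."""
    iterations = ITERS_1X * schedule_multiplier
    return iterations * IMAGES_PER_BATCH / COCO_TRAIN2017_IMAGES


if __name__ == "__main__":
    for multiplier in (1, 3):
        print(f"{multiplier}x schedule: about {coco_epochs(multiplier):.1f} epochs")
```

Under these assumptions the 1x schedule comes out at roughly 12 epochs and the 3x schedule at roughly 37, matching the figures above.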

3.1. ImageNet pretrained models

These are backbone models trained on the ImageNet-1k dataset. They differ from the ones provided in Detectron: BatchNorm is not fused into an affine layer.

[1] - R-50.pkl: converted copy of MSRA's original ResNet-50 model

[2] - R-101.pkl: converted copy of MSRA's original ResNet-101 model

[3] - X-101-32x8d.pkl: ResNeXt-101-32x8d model trained with Caffe2 at FB

Some pretrained models from Detectron are still in use, for example:

[1] - X-152-32x8d-IN5k.pkl: ResNeXt-152-32x8d model trained on ImageNet-5k with Caffe2 at FB (see ResNeXt paper for details on ImageNet-5k).

[2] - R-50-GN.pkl: ResNet-50 with Group Normalization.

[3] - R-101-GN.pkl: ResNet-101 with Group Normalization.

3.2. COCO Object Detection Baselines

3.2.1. Faster R-CNN

| Name | lr sched | train time (s/iter) | inference time (s/im) | train mem (GB) | box AP | model id | download |

|---|---|---|---|---|---|---|---|

| R50-C4 | 1x | 0.551 | 0.110 | 4.8 | 35.7 | 137257644 | model & metrics |

| R50-DC5 | 1x | 0.380 | 0.068 | 5.0 | 37.3 | 137847829 | model & metrics |

| R50-FPN | 1x | 0.210 | 0.055 | 3.0 | 37.9 | 137257794 | model & metrics |

| R50-C4 | 3x | 0.543 | 0.110 | 4.8 | 38.4 | 137849393 | model & metrics |

| R50-DC5 | 3x | 0.378 | 0.073 | 5.0 | 39.0 | 137849425 | model & metrics |

| R50-FPN | 3x | 0.209 | 0.047 | 3.0 | 40.2 | 137849458 | model & metrics |

| R101-C4 | 3x | 0.619 | 0.149 | 5.9 | 41.1 | 138204752 | model & metrics |

| R101-DC5 | 3x | 0.452 | 0.082 | 6.1 | 40.6 | 138204841 | model & metrics |

| R101-FPN | 3x | 0.286 | 0.063 | 4.1 | 42.0 | 137851257 | model & metrics |

| X101-FPN | 3x | 0.638 | 0.120 | 6.7 | 43.0 | 139173657 | model & metrics |

3.2.2. RetinaNet

| Name | lr sched | train time (s/iter) | inference time (s/im) | train mem (GB) | box AP | model id | download |

|---|---|---|---|---|---|---|---|

| R50 | 1x | 0.200 | 0.062 | 3.9 | 36.5 | 137593951 | model & metrics |

| R50 | 3x | 0.201 | 0.063 | 3.9 | 37.9 | 137849486 | model & metrics |

| R101 | 3x | 0.280 | 0.080 | 5.1 | 39.9 | 138363263 | model & metrics |

3.2.3. RPN & Fast R-CNN

| Name | lr sched | train time (s/iter) | inference time (s/im) | train mem (GB) | box AP | prop. AR | model id | download |

|---|---|---|---|---|---|---|---|---|

| RPN R50-C4 | 1x | 0.130 | 0.051 | 1.5 | | 51.6 | 137258005 | model & metrics |

| RPN R50-FPN | 1x | 0.186 | 0.045 | 2.7 | | 58.0 | 137258492 | model & metrics |

| Fast R-CNN R50-FPN | 1x | 0.140 | 0.035 | 2.6 | 37.8 | | 137635226 | model & metrics |

3.3. COCO Instance Segmentation Baselines

Mask R-CNN:

| Name | lr sched | train time (s/iter) | inference time (s/im) | train mem (GB) | box AP | mask AP | model id | download |

|---|---|---|---|---|---|---|---|---|

| R50-C4 | 1x | 0.584 | 0.117 | 5.2 | 36.8 | 32.2 | 137259246 | model & metrics |

| R50-DC5 | 1x | 0.471 | 0.074 | 6.5 | 38.3 | 34.2 | 137260150 | model & metrics |

| R50-FPN | 1x | 0.261 | 0.053 | 3.4 | 38.6 | 35.2 | 137260431 | model & metrics |

| R50-C4 | 3x | 0.575 | 0.118 | 5.2 | 39.8 | 34.4 | 137849525 | model & metrics |

| R50-DC5 | 3x | 0.470 | 0.075 | 6.5 | 40.0 | 35.9 | 137849551 | model & metrics |

| R50-FPN | 3x | 0.261 | 0.055 | 3.4 | 41.0 | 37.2 | 137849600 | model & metrics |

| R101-C4 | 3x | 0.652 | 0.155 | 6.3 | 42.6 | 36.7 | 138363239 | model & metrics |

| R101-DC5 | 3x | 0.545 | 0.155 | 7.6 | 41.9 | 37.3 | 138363294 | model & metrics |

| R101-FPN | 3x | 0.340 | 0.070 | 4.6 | 42.9 | 38.6 | 138205316 | model & metrics |

| X101-FPN | 3x | 0.690 | 0.129 | 7.2 | 44.3 | 39.5 | 139653917 | model & metrics |

3.4. COCO Person Keypoint Detection Baselines

Keypoint R-CNN:

| Name | lr sched | train time (s/iter) | inference time (s/im) | train mem (GB) | box AP | kp. AP | model id | download |

|---|---|---|---|---|---|---|---|---|

| R50-FPN | 1x | 0.315 | 0.083 | 5.0 | 53.6 | 64.0 | 137261548 | model & metrics |

| R50-FPN | 3x | 0.316 | 0.076 | 5.0 | 55.4 | 65.5 | 137849621 | model & metrics |

| R101-FPN | 3x | 0.390 | 0.090 | 6.1 | 56.4 | 66.1 | 138363331 | model & metrics |

| X101-FPN | 3x | 0.738 | 0.142 | 8.7 | 57.3 | 66.0 | 139686956 | model & metrics |

3.5. COCO Panoptic Segmentation Baselines

Panoptic FPN:

| Name | lr sched | train time (s/iter) | inference time (s/im) | train mem (GB) | box AP | mask AP | PQ | model id | download |

|---|---|---|---|---|---|---|---|---|---|

| R50-FPN | 1x | 0.304 | 0.063 | 4.8 | 37.6 | 34.7 | 39.4 | 139514544 | model & metrics |

| R50-FPN | 3x | 0.302 | 0.063 | 4.8 | 40.0 | 36.5 | 41.5 | 139514569 | model & metrics |

| R101-FPN | 3x | 0.392 | 0.078 | 6.0 | 42.4 | 38.5 | 43.0 | 139514519 | model & metrics |

3.6. LVIS Instance Segmentation Baselines

Mask R-CNN (trained for roughly 24 epochs on the LVIS v0.5 dataset):

| Name | lr sched | train time (s/iter) | inference time (s/im) | train mem (GB) | box AP | mask AP | model id | download |

|---|---|---|---|---|---|---|---|---|

| R50-FPN | 1x | 0.292 | 0.127 | 7.1 | 23.6 | 24.4 | 144219072 | model & metrics |

| R101-FPN | 1x | 0.371 | 0.124 | 7.8 | 25.6 | 25.9 | 144219035 | model & metrics |

| X101-FPN | 1x | 0.712 | 0.166 | 10.2 | 26.7 | 27.1 | 144219108 | model & metrics |

3.7. Cityscapes & Pascal VOC Baselines

| Name | train time (s/iter) | inference time (s/im) | train mem (GB) | box AP | box AP50 | mask AP | model id | download |

|---|---|---|---|---|---|---|---|---|

| R50-FPN, Cityscapes | 0.240 | 0.092 | 4.4 | | | 36.5 | 142423278 | model & metrics |

| R50-C4, VOC | 0.537 | 0.086 | 4.8 | 51.9 | 80.3 | | 142202221 | model & metrics |

3.8. Deformable Conv and Cascade R-CNN

| Name | lr sched | train time (s/iter) | inference time (s/im) | train mem (GB) | box AP | mask AP | model id | download |

|---|---|---|---|---|---|---|---|---|

| Baseline R50-FPN | 1x | 0.261 | 0.053 | 3.4 | 38.6 | 35.2 | 137260431 | model & metrics |

| Deformable Conv | 1x | 0.342 | 0.061 | 3.5 | 41.5 | 37.5 | 138602867 | model & metrics |

| Cascade R-CNN | 1x | 0.317 | 0.066 | 4.0 | 42.1 | 36.4 | 138602847 | model & metrics |

| Baseline R50-FPN | 3x | 0.261 | 0.055 | 3.4 | 41.0 | 37.2 | 137849600 | model & metrics |

| Deformable Conv | 3x | 0.349 | 0.066 | 3.5 | 42.7 | 38.5 | 144998336 | model & metrics |

| Cascade R-CNN | 3x | 0.328 | 0.075 | 4.0 | 44.3 | 38.5 | 144998488 | model & metrics |

3.9. Other settings

Different normalization methods:

| Name | lr sched | train time (s/iter) | inference time (s/im) | train mem (GB) | box AP | mask AP | model id | download |

|---|---|---|---|---|---|---|---|---|

| Baseline R50-FPN | 3x | 0.261 | 0.055 | 3.4 | 41.0 | 37.2 | 137849600 | model & metrics |

| SyncBN | 3x | 0.464 | 0.063 | 5.6 | 42.0 | 37.8 | 143915318 | model & metrics |

| GN | 3x | 0.356 | 0.077 | 7.3 | 42.6 | 38.6 | 138602888 | model & metrics |

| GN (scratch) | 3x | 0.400 | 0.077 | 9.8 | 39.9 | 36.6 | 138602908 | model & metrics |

A few very large models, trained for a long time and provided for demo purposes only:

| Name | inference time (s/im) | train mem (GB) | box AP | mask AP | PQ | model id | download |

|---|---|---|---|---|---|---|---|

| Panoptic FPN R101 | 0.123 | 11.4 | 47.4 | 41.3 | 46.1 | 139797668 | model & metrics |

| Mask R-CNN X152 | 0.281 | 15.1 | 49.3 | 43.2 | | 18131413 | model & metrics |

| above + test-time aug. | | | 51.4 | 45.5 | | | |

4. Training models

Using the default COCO dataset as an example:

8 GPUs:

python tools/train_net.py --num-gpus 8 \
  --config-file configs/COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_1x.yaml

1 GPU:

python tools/train_net.py \
  --config-file configs/COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_1x.yaml \
  SOLVER.IMS_PER_BATCH 2 SOLVER.BASE_LR 0.0025

CPU training is not supported.

When the batch size changes, the learning rate should be adjusted according to the linear learning rate scaling rule.
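As a rough illustration of the linear scaling rule, the learning rate is scaled in proportion to the number of images per batch. The sketch below assumes a reference learning rate of 0.02 for the 16-image minibatch; that reference value is an assumption, not something stated in this post, and `scaled_lr` is an illustrative helper rather than a detectron2 function.

```python
REFERENCE_BATCH = 16  # minibatch size from the model zoo settings
REFERENCE_LR = 0.02   # assumption: base learning rate paired with that batch size


def scaled_lr(ims_per_batch, reference_lr=REFERENCE_LR, reference_batch=REFERENCE_BATCH):
    """Scale the learning rate linearly with the number of images per batch."""
    if ims_per_batch <= 0:
        raise ValueError("ims_per_batch must be positive")
    return reference_lr * ims_per_batch / reference_batch


if __name__ == "__main__":
    # Matches the single-GPU command: SOLVER.IMS_PER_BATCH 2 SOLVER.BASE_LR 0.0025
    print(scaled_lr(2))
```

With these reference values, a batch of 2 images gives the 0.0025 used in the single-GPU command.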

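Model evaluation:

python tools/train_net.py --config-file configs/COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_1x.yaml --eval-only MODEL.WEIGHTS /path/to/checkpoint_file

For the full list of arguments, see:

python tools/train_net.py -h

5. Related material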

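[1] - Caffe2 - Detectron installation

[2] - Caffe2 - Detectron basic usage

[3] - Caffe2 - Detectron image test results

[4] - Caffe2 - Detectron model training and data loading pipeline

[5] - Github project - maskrcnn-benchmark basic usage and examples

[6] - Github project - mmdetection object detection library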

40 comments

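Blogger, can detectron2 extract building outlines? How exactly would I do it? ( ๑´•ω•)

Get a segmentation map of the buildings, then take the edges of that map?

I mean using detectron2's Mask R-CNN to extract the outlines of buildings in remote sensing images.

Once you have the mask, something like OpenCV's cv2.findContours should give you the contours.

Is there any way to automatically extract buildings from remote sensing images?

Detection or segmentation? Either traditional image processing or deep learning methods.

Blogger, which of these models support video or a webcam? Is that covered in the docs? I tried several in the demo and only the one you show here would run.

The models don't distinguish between video and images; video and webcam frames are both read with OpenCV.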

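Hello, I have a question. When training a panoptic segmentation model I used panoptic_fpn_R_101_3x.yaml with R-101.pkl as the pretrained weights, but the run fails with "AttributeError: 'NoneType' object has no attribute 'size'". Is my pretrained model configuration wrong, or does the COCO format of a panoptic segmentation dataset differ from the one used for Mask R-CNN?

It may be a dataset problem. Could the images not be getting read?

I debugged it yesterday and it was indeed a dataset mismatch. Is there an example of training a panoptic segmentation model with detectron2? The parameters for panoptic segmentation differ somewhat from those for instance segmentation.

You can refer to Panoptic-DeepLab.

Which dataset is used here?

The COCO dataset.

OK, thanks.

I ran into problem 2 from this blog post and cannot get past it. Help!

https://blog.csdn.net/m0_37661841/article/details/108318364

Are you mixing conda and pip environments?

I installed everything with pip.

Does the problem still occur?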

How do I fix this error? AssertionError: Checkpoint detectron2://ImageNetPretrained/MSRA/R-101.pkl not found!

Replace that path with a local path, for example /path/to/ImageNetPretrained/MSRA/R-101.pkl
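To use a local path, the checkpoint has to be downloaded first. The sketch below shows one way to turn a detectron2:// name into a plain download URL. It assumes the shorthand is backed by the public host https://dl.fbaipublicfiles.com/detectron2/ (the same host as the wheel URLs earlier in this post); `to_download_url` is a hypothetical helper, not a detectron2 function.

```python
DETECTRON2_SCHEME = "detectron2://"
# Assumption: public host that backs the detectron2:// shorthand.
DOWNLOAD_PREFIX = "https://dl.fbaipublicfiles.com/detectron2/"


def to_download_url(weights):
    """Expand a detectron2:// name to an https URL; leave other paths untouched."""
    if not weights.startswith(DETECTRON2_SCHEME):
        return weights
    return DOWNLOAD_PREFIX + weights[len(DETECTRON2_SCHEME):]


if __name__ == "__main__":
    print(to_download_url("detectron2://ImageNetPretrained/MSRA/R-101.pkl"))
```

After downloading the file from that URL (for example with wget), point MODEL.WEIGHTS at the saved file.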

plt.imshow(im[:, :, ::-1]) raises TypeError: 'NoneType' object is not subscriptable

im is probably None, which means the image was not read.
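cv2.imread returns None instead of raising when it cannot read a file, so the failure only shows up later at the first indexing operation. A small guard makes the cause obvious. This is a sketch; `check_image` is a hypothetical helper name.

```python
import os


def check_image(im, path):
    """Return `im` unchanged, or raise a clear error if the loader returned None."""
    if im is None:
        if not os.path.exists(path):
            raise FileNotFoundError(f"image file not found: {path!r}")
        raise ValueError(f"file exists but could not be decoded as an image: {path!r}")
    return im


# Typical use with OpenCV (requires cv2 and an existing file):
#   im = check_image(cv2.imread("./input.jpg"), "./input.jpg")
```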

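Hello, how can I extract the instance segmentation results from the final output and save them as the xy coordinates of the object in the image?

Take the extreme boundaries of the segmentation result; those give the top, bottom, left, and right coordinates of the object.

That only gives the 2D bounding box, though. What I mainly wanted was the coordinates of every point in the mask. I found a way: use np.where to find the coordinates of the points in the mask that are True. Thanks for the reply.

Nice, well done!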
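Both suggestions in this thread can be sketched with NumPy alone. The example uses a small synthetic boolean mask; with detectron2, the mask would come from something like outputs["instances"].pred_masks[i] moved to the CPU and converted to a NumPy array, which is an assumption about the output fields and is not shown in this post.

```python
import numpy as np


def mask_pixel_coords(mask):
    """Return an (N, 2) array of (x, y) coordinates where the mask is True."""
    ys, xs = np.where(mask)
    return np.stack([xs, ys], axis=1)


def mask_bounding_box(mask):
    """Return (x_min, y_min, x_max, y_max) of the True region, or None if empty."""
    ys, xs = np.where(mask)
    if len(xs) == 0:
        return None
    return int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())


# Synthetic 5x6 mask with a 2x3 block of True values.
mask = np.zeros((5, 6), dtype=bool)
mask[1:3, 2:5] = True

coords = mask_pixel_coords(mask)
box = mask_bounding_box(mask)
print(coords.shape, box)
```

np.where returns row indices first, so the helper swaps them to get (x, y) order, the usual convention for image coordinates.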

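I've hit a strange problem that doesn't happen on every install. The error is:

g++: error: /home/fpi/Videos/detectron2/build/temp.linux-x86_64-3.6/home/fpi/Videos/detectron2/detectron2/layers/csrc/cuda_version.o: No such file or directory

I checked that path, and both the permissions and the path itself look fine, so it is a bit odd. OωO Could you help me figure out the cause?

Have you checked CUDA? Try python -c 'import torch; from torch.utils.cpp_extension import CUDA_HOME; print(torch.cuda.is_available(), CUDA_HOME)'

How do I train on my own data using a model that has not been trained?

By "a model that has not been trained", do you mean finetuning?

Most examples load pretrained models. Say I want to train an instance segmentation model: how do I load a model that has not been pretrained, just the bare network (like a plain CNN)? Or, if I download a pretrained model, I want only the model and not its trained weights and parameters. How do I do that? Could you explain briefly? Thanks.

So you want to train from scratch, with only the network architecture and no pretrained weights, right? In that case just comment out the code that loads the weights.

I installed the framework following online tutorials, but testing always fails with "The input path(s) was not found". I downloaded the weight file and the path is correct, so why does it keep failing?

Where exactly does the error occur?

Blogger, are the detectron pretrained models trained on MIT's ADE20k dataset?

They should be based on the COCO dataset.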
